What Is GitHub Copilot?

Developed in collaboration with OpenAI and Microsoft, GitHub Copilot uses an AI model trained on millions of public GitHub repositories to offer real-time code suggestions as you type. It also generates code based on natural language prompts in a chat window or directly in the code editor.

Beyond helping you write new code, Copilot in VS Code can also explain and fix existing code, summarize your changes, and write commit messages for you.

Copilot is like an automated pair programmer that synthesizes the collective knowledge of the GitHub community and brings it to every individual developer.

At the same time, Copilot takes your existing code into account by analyzing the surrounding context in your open editor tabs.

Basic Copilot use cases include writing boilerplate code, scaffolding new projects, and generating unit tests.

Copilot can help with any language that appears in public repositories, but it works best with the languages that are most popular on GitHub, such as JavaScript, TypeScript, Python, Java, C#, C/C++, PHP, Shell, and Ruby.

There are several ways to launch Copilot inside your code editor (IDE):

- Simply start writing code and wait for code-completion suggestions to appear.

- Write a code comment describing what you want to do in natural language.

- Enter a prompt in a chat window or a dedicated pop-up provided by the IDE extension that you’re using.

Why Do You Need to Ensure the Quality of AI-Generated Code?

Can generative AI ever be wrong? Aren’t GitHub Copilot and other AI tools supposed to write perfect code for you?

Unfortunately, no.

While generative AI tools can suggest how to write code to solve a certain task, they don't guarantee that the code is free of errors and vulnerabilities.

After all, they’re trained on existing code written by humans, and humans tend to make mistakes.

Ultimately, it’s your responsibility to double-check any code that AI generates for you.

When dealing with AI-generated code, apply the same critical review process that you would use when you copy and paste code snippets from Stack Overflow, review your own code, or review your teammates' code.

AI-generated code may introduce bugs and vulnerabilities that were only recently discovered, contain semantic errors, use outdated language syntax, or follow formatting conventions that aren’t a good fit for your codebase.

Another factor at play with AI tools like GitHub Copilot is that they’re probabilistic, not deterministic.

They’re going to be right most of the time, but not all the time.

If you use the same prompt several times, you may get a different result each time.

To get the best possible results from generative AI tools like GitHub Copilot, you need thorough testing, along with tools that scan your code for quality issues and vulnerabilities.

Installing GitHub Copilot in VS Code

Let’s see what using GitHub Copilot is like in action.

This article shows GitHub Copilot in Visual Studio Code (VS Code), but you can use it with other IDEs, including most JetBrains IDEs and Microsoft Visual Studio.

If you already have a GitHub Copilot account and the Copilot extension installed in VS Code, feel free to skip this section.

Otherwise, first and foremost, make sure you have VS Code installed.

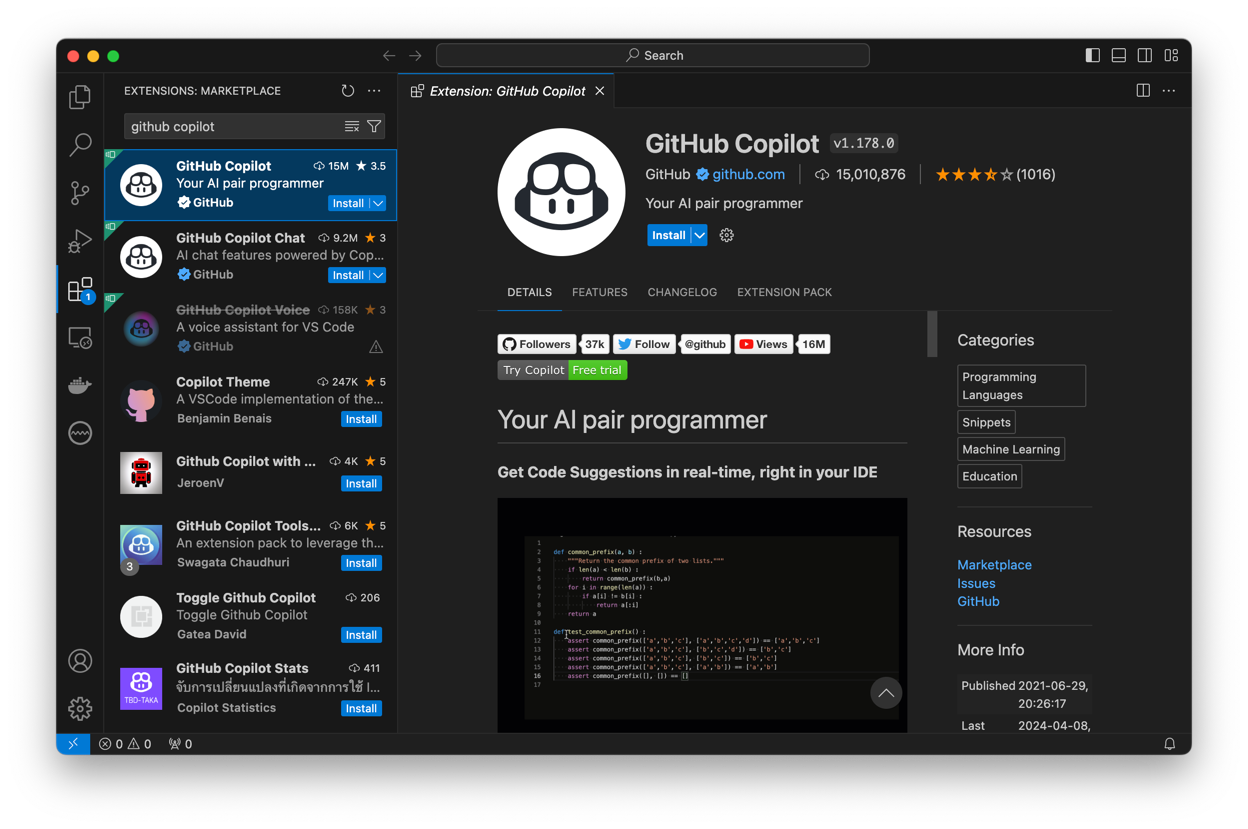

Once you’re inside VS Code, open the Extensions pane, search for the GitHub Copilot extension, and install it:

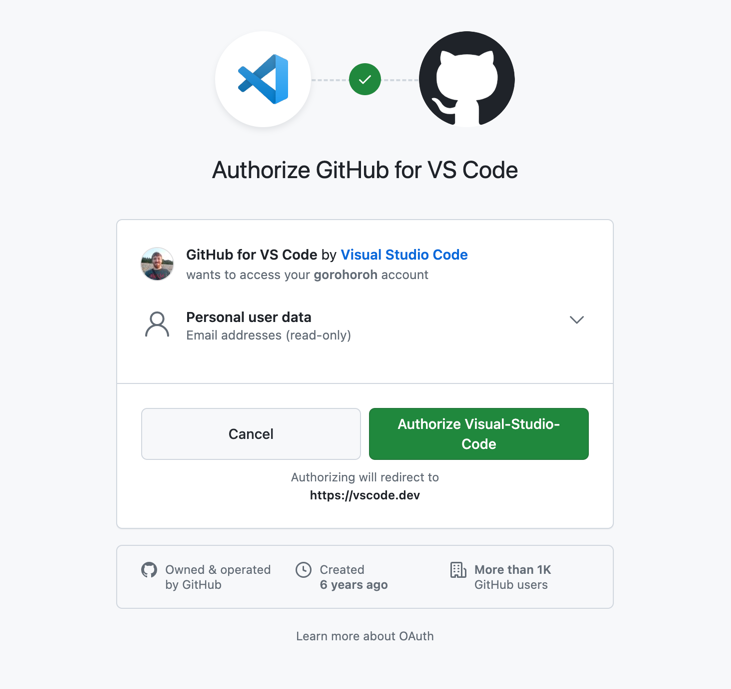

After installing the extension, you’ll be prompted to log in to GitHub from VS Code.

Click Sign in to GitHub, then allow the Copilot extension to sign in. Once GitHub opens in the browser, click Authorize Visual-Studio-Code.

GitHub Copilot isn't available to GitHub users by default; you need to explicitly sign up for it.

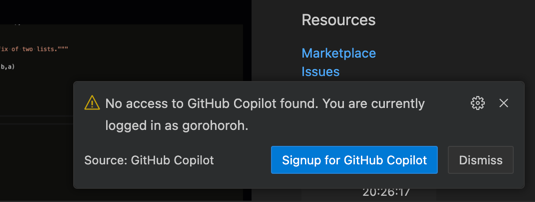

Unless you're an existing Copilot user, when you return to VS Code, you'll see a pop-up notification that says No access to GitHub Copilot found.

Click Signup for GitHub Copilot.

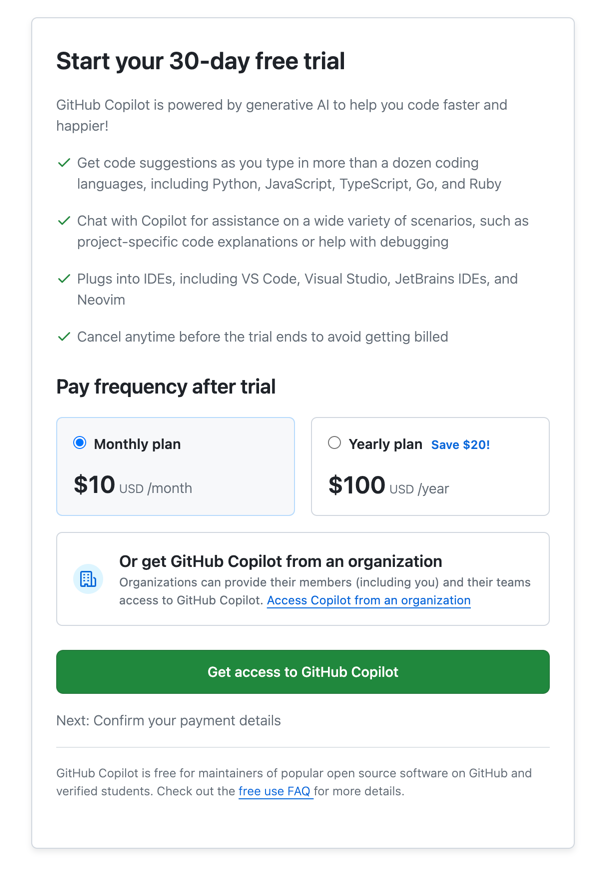

On the GitHub Copilot registration page that opens in the browser, click Get access to GitHub Copilot to start a 30-day Copilot trial.

You’ll be asked to enter or confirm your payment details on GitHub.

When you’re done with this part, GitHub will let you set your basic preferences.

Set Suggestions matching public code to Allowed, then click Save and complete setup.

Finally, restart VS Code, and you should be all set to use GitHub Copilot.

Analyzing AI-Generated Code Quality in Real Time with SonarQube for IDE

To monitor the quality of your code as you type or as Copilot generates it, you can install SonarQube for IDE for VS Code.

SonarQube for IDE is a free, advanced linter extension that helps you maintain clean code by catching bugs and code smells before you commit your changes.

To install SonarQube for IDE, open Visual Studio Code’s Extensions pane, search for SonarQube for IDE, and install the SonarQube for IDE extension by SonarSource when it shows up in search results.

Let’s see how SonarQube for IDE can help when you generate code using Copilot. If you want to follow along, here is a repo with the starter code.

Let's say you're editing a restaurant directory: an ASP.NET Core MVC web application written in C#. Initially, you have a controller, HomeController.cs, that looks like this:

```csharp
using Microsoft.AspNetCore.Authorization;
using Microsoft.AspNetCore.Mvc;
using OdeToFood.Models;
using OdeToFood.Services;
using OdeToFood.ViewModels;

namespace OdeToFood.Controllers
{
    [Authorize]
    public class HomeController : Controller
    {
        IRestaurantData _restaurantData;

        public HomeController(IRestaurantData restaurantData)
        {
            _restaurantData = restaurantData;
        }

        [AllowAnonymous]
        public IActionResult Index()
        {
            var model = new HomeIndexViewModel();
            model.Restaurants = _restaurantData.GetAll();
            return View(model);
        }

        [AllowAnonymous]
        public IActionResult Details(int id)
        {
            var model = _restaurantData.Get(id);
            if (model == null)
            {
                return RedirectToAction(nameof(Index));
            }
            return View(model);
        }

        [HttpGet]
        public IActionResult Create()
        {
            return View();
        }

        [HttpPost]
        [ValidateAntiForgeryToken]
        public IActionResult Create(RestaurantEditModel model)
        {
            if (ModelState.IsValid)
            {
                var newRestaurant = new Restaurant();
                newRestaurant.Name = model.Name;
                newRestaurant.Cuisine = model.Cuisine;
                newRestaurant = _restaurantData.Add(newRestaurant);
                return RedirectToAction("Details", new {id = newRestaurant.Id});
            }
            return View();
        }
    }
}
```

The controller has existing actions for listing all restaurants (Index) and showing detailed information about an existing restaurant (Details), as well as a pair of actions used to create a restaurant (Create).

Let’s use Copilot to generate code to edit an existing restaurant.

One way to trigger Copilot suggestions is to write a comment describing what you want, press Enter to start a new line, and wait for Copilot to start suggesting code.

If you give Copilot a prompt that says “Create an action to edit a restaurant”, here’s what it may come up with:

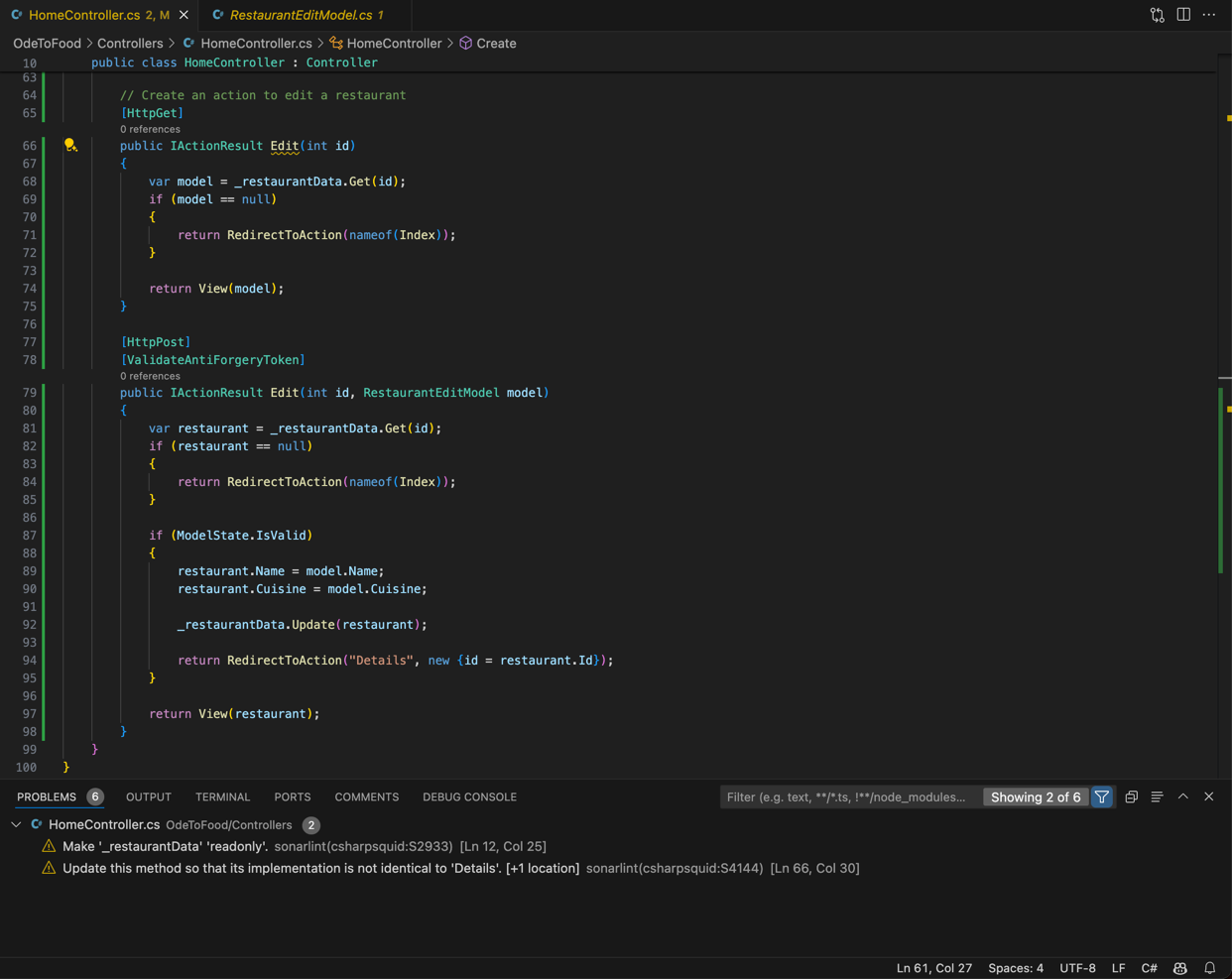

Copilot has generated two controller actions: Edit(int id) to display the restaurant edit view and Edit(int id, RestaurantEditModel model) to process updated restaurant data.

The generated code looks fine, but SonarQube for IDE has detected a potential problem related to the first method.

It’s indicated by a squiggle in the code editor as well as by a new item in the Problems view in the lower part of the VS Code workspace.

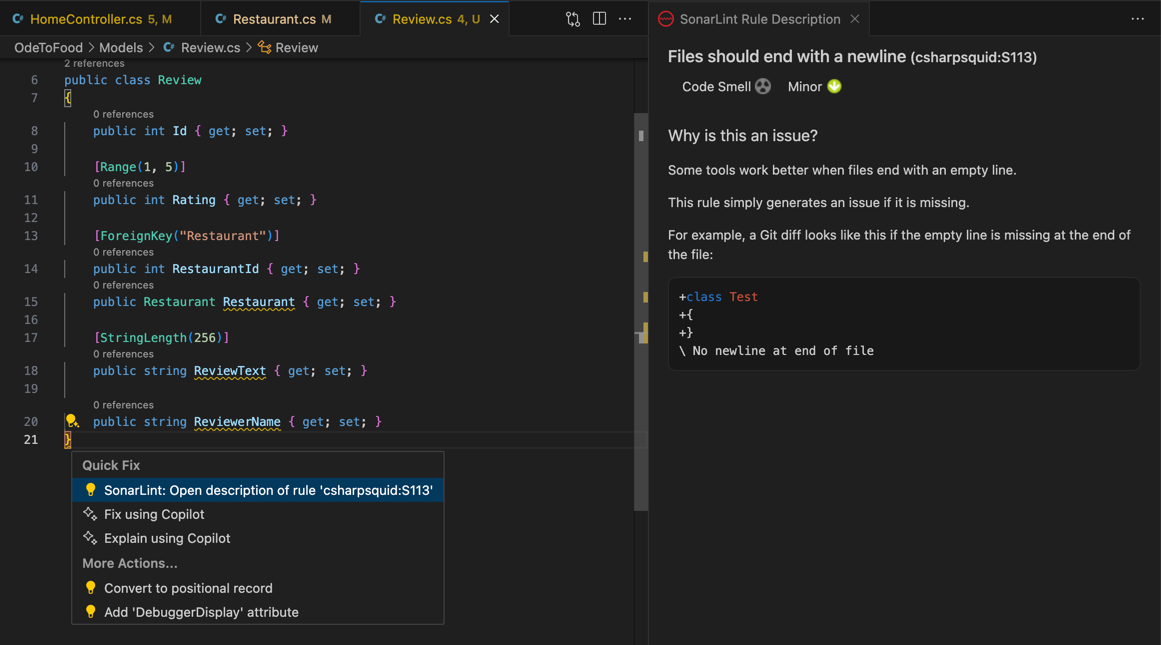

If you press Ctrl+. (Cmd+. on macOS) with the caret on the squiggle, you'll see a pop-up menu with details about the problem, automated fixes (if any), and other context-specific actions provided by SonarQube for IDE, VS Code, or other VS Code extensions.

In this case, SonarQube for IDE has detected that the implementation of the new action is identical to an existing action, Details(int id).

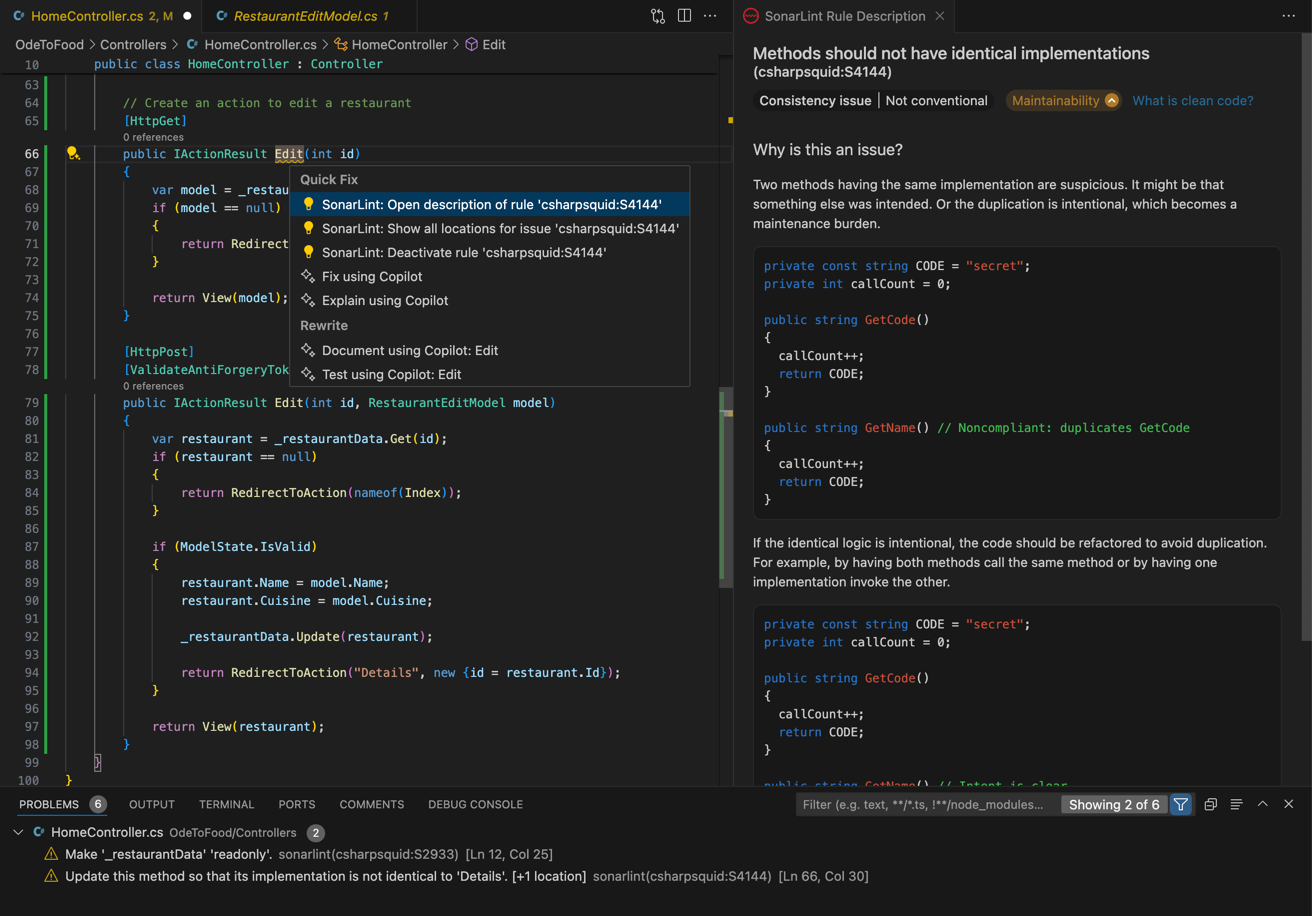

If you select SonarQube for IDE: Open description of rule csharpsquid:S4144, you can read the description of the applicable rule in SonarQube for IDE’s Rule Description pane.

SonarQube for IDE classifies this problem as a possible maintainability issue.

If the duplication is intentional, consider a refactoring that extracts the shared code into a single method that both actions call.

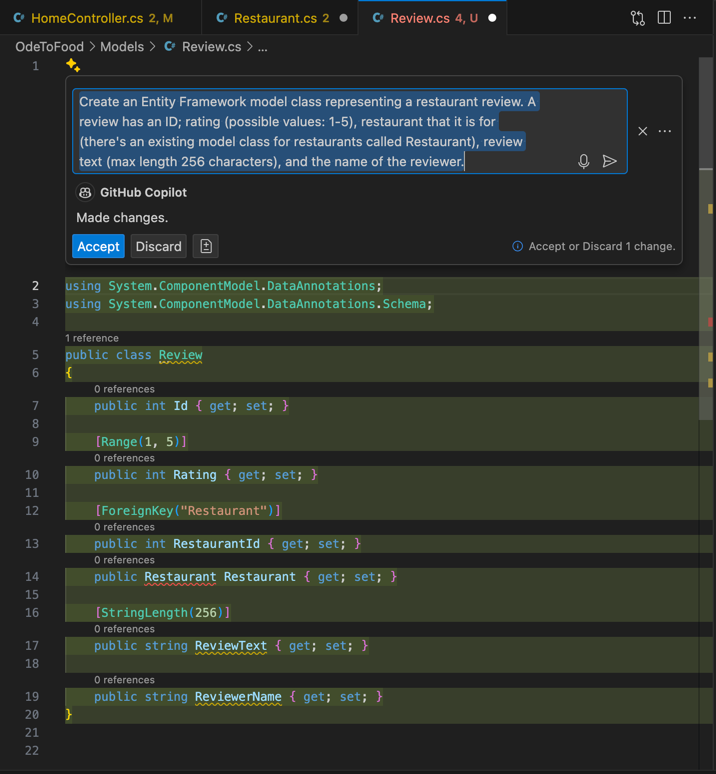
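As a sketch of that refactoring, assuming the Copilot-generated Edit(int id) has the same body as Details(int id), both GET actions can delegate to one private helper. The helper name RestaurantView is hypothetical, the Create actions and the POST Edit action are omitted for brevity, and the code assumes the starter repo's IRestaurantData and HomeIndexViewModel types plus an Edit view. Passing the view name explicitly keeps each action rendering its own view.

```csharp
using Microsoft.AspNetCore.Authorization;
using Microsoft.AspNetCore.Mvc;
using OdeToFood.Services;
using OdeToFood.ViewModels;

namespace OdeToFood.Controllers
{
    [Authorize]
    public class HomeController : Controller
    {
        private readonly IRestaurantData _restaurantData;

        public HomeController(IRestaurantData restaurantData)
        {
            _restaurantData = restaurantData;
        }

        [AllowAnonymous]
        public IActionResult Index()
        {
            var model = new HomeIndexViewModel();
            model.Restaurants = _restaurantData.GetAll();
            return View(model);
        }

        // Both GET actions delegate to the same helper,
        // so their bodies are no longer identical copies.
        [AllowAnonymous]
        public IActionResult Details(int id) => RestaurantView(id, nameof(Details));

        [HttpGet]
        public IActionResult Edit(int id) => RestaurantView(id, nameof(Edit));

        // Create (GET/POST) and the POST Edit action are unchanged and omitted here.

        // Hypothetical helper: loads a restaurant and renders the named view,
        // or redirects to Index when no restaurant has this id.
        // Private methods are not exposed as MVC actions.
        private IActionResult RestaurantView(int id, string viewName)
        {
            var model = _restaurantData.Get(id);
            if (model == null)
            {
                return RedirectToAction(nameof(Index));
            }
            return View(viewName, model);
        }
    }
}
```

With this shape, any future change to the lookup-or-redirect logic happens in one place instead of two.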

Let’s now ask Copilot to generate an Entity Framework model class defining a restaurant review.

Here’s a possible prompt that could work for this purpose and the code that Copilot might generate in response:

That’s some nice usage of Entity Framework model attributes by Copilot!

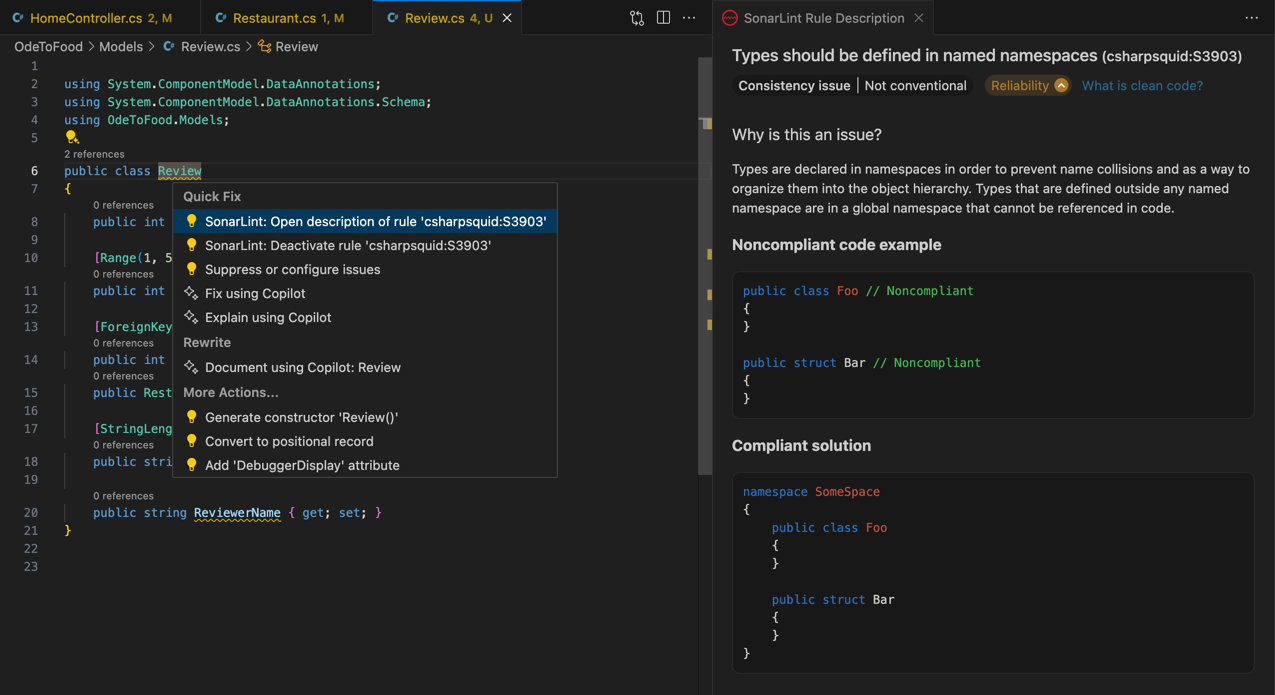

However, the generated code has a few problems.

Some of them are spotted by Microsoft’s C# language plugin, others by SonarQube for IDE.

For example, SonarQube for IDE notices that the generated class is not inside a namespace, which could lead to name collisions later on.

This problem can be solved easily by adding a namespace OdeToFood.Models; declaration to the Review.cs file.
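For illustration, here is what Review.cs might look like with that fix applied. The Review properties and their constraints are hypothetical, not the code from the screenshot above; the part that matters is the file-scoped namespace OdeToFood.Models; declaration (C# 10 or later), which puts the class in the same namespace as the app's other models. The attributes come from System.ComponentModel.DataAnnotations, which both Entity Framework Core and ASP.NET Core model validation read.

```csharp
using System.ComponentModel.DataAnnotations;

// File-scoped namespace: Review is no longer declared in the global namespace.
namespace OdeToFood.Models;

// Hypothetical review model; property names and limits are illustrative assumptions.
public class Review
{
    // Primary key (EF Core would also infer this from the name "Id").
    [Key]
    public int Id { get; set; }

    // Required rejects null and empty strings by default.
    [Required]
    [StringLength(100)]
    public string Reviewer { get; set; } = string.Empty;

    // Validation-only constraint: ratings from 1 to 5.
    [Range(1, 5)]
    public int Rating { get; set; }

    [StringLength(1000)]
    public string Body { get; set; } = string.Empty;

    // Id of the restaurant being reviewed.
    public int RestaurantId { get; set; }
}
```

Because these are plain data annotations, you can check them without a database by calling Validator.TryValidateObject on a Review instance.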

Applying Team-Wide Code Quality Rules with SonarQube for IDE’s Connected Mode

Using SonarQube for IDE’s code inspections inside VS Code is nice in itself, but the code you write doesn’t usually exist in isolation. More often than not, you’re writing code that builds upon an existing codebase.

This codebase is probably maintained by a team that you’re part of, and the team might be using SonarQube Server or SonarQube Cloud to analyze all commits using a custom rule set.

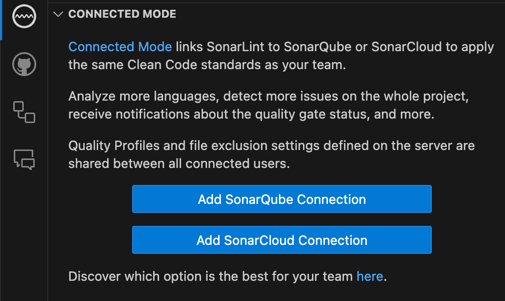

This is where you can take advantage of SonarQube for IDE’s Connected Mode.

It pairs SonarQube for IDE with your SonarQube Server or SonarQube Cloud instance, ensuring that both your local edits and the commits you push to the repository are inspected for code quality using the same analysis settings across your team.

To enable Connected Mode, open the SonarQube for IDE pane in VS Code and click Add SonarQube Server Connection or Add SonarQube Cloud Connection, depending on which of these tools you’re using for server-side code quality analysis:

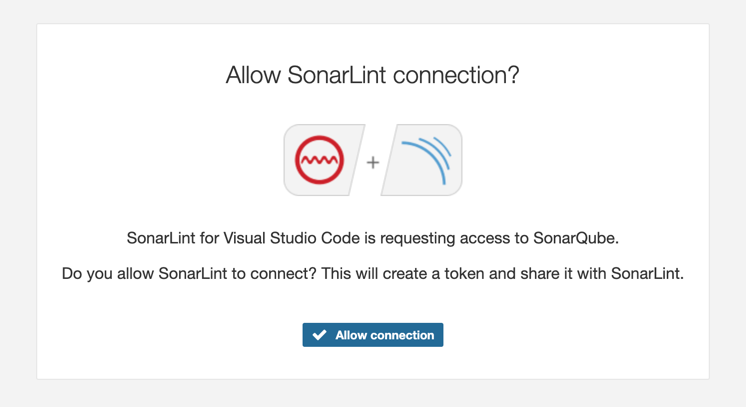

In the SonarQube Server Connection editor tab that opens, enter your SonarQube Server URL, and click Generate Token.

This will open your SonarQube Server instance, which will ask you to allow the connection from SonarQube for IDE in VS Code. Click Allow connection.

When you return to VS Code, the SonarQube Server Connection pane should already include a new connection token that SonarQube Server has just generated.

Click Save Connection.

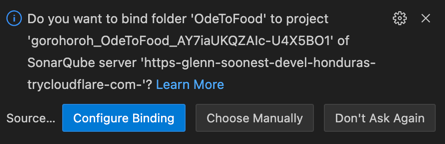

Finally, SonarQube for IDE will display a notification suggesting that you bind your VS Code workspace to the SonarQube Server project for your code repository. Click Configure Binding to complete the Connected Mode setup.

When Connected Mode is on and your SonarQube Server project uses a customized quality profile (a rule set), SonarQube for IDE reports problems according to that profile.

For example, if your SonarQube Server quality profile for C# requires an empty line at the end of every file, SonarQube for IDE will remind you to add one to the Review.cs file containing the review model class that Copilot generated.

Connected Mode also enables synchronization of customized rule descriptions and issue status (Accept/False Positive) between SonarQube Server (or SonarQube Cloud) and the SonarQube for IDE plugin in your IDE.

Summary

GitHub Copilot can help developers spend less time writing boilerplate code and focus on higher-order matters like clean architecture, scalability, and maintainability.

However, the code that Copilot generates is not guaranteed to be free of errors and vulnerabilities.

As you integrate Copilot and other generative AI services into your toolset, remember to keep your code quality gates working.

To help you with that, Sonar offers tools for continuous code quality analysis, including SonarQube for IDE, SonarQube Server, and SonarQube Cloud, that keep your code clean both in your code editor and in your CI/CD pipelines.

These tools help you detect and fix bugs and vulnerabilities in AI-generated code, as well as make sure that it adheres to your team’s code style and code quality standards.

This article on using GitHub Copilot and ensuring the quality of AI-generated code was written by Jura Gorohovsky.

June 11, 2024