In the traditional software development lifecycle (SDLC), code authorship was generally well understood. Whenever developers worked on a code change, their tools automatically captured what changed, when it was committed, and who made the change. Up to this point, the assumption has been that whoever committed a change to the repository also wrote and tested the code.

Even if a developer finds a code snippet in an online example, they usually have to modify it to make it work within their own code. To do that, they have to understand how the code works, which means taking ownership of it and making it their own in the process. This ownership is a foundational element of trust in the code being managed.

The trust breakdown when AI generates code

When generative AI solutions are added to the mix, this trust can break down. AI coding assistants can draw on much of the context within your codebase (including the variables, functions, and libraries already in use), so the code they generate often fits the software it’s meant to enhance. As a result, developers have to make fewer modifications to get AI-generated code working.

This encourages developers to accept AI-generated code blindly, without taking the time to understand what it does or how it works. In this new world, we still know who checked in the code, but its origin, and therefore its ownership, becomes increasingly obscure.

Furthermore, if the organization has no approved tools or processes for AI-assisted coding, developers may resort to “shadow IT” practices. They could use publicly available AI tools like ChatGPT, or download and run their own models. They could then commit code under their own name that hasn’t been rigorously vetted, reviewed, or tested, further eroding trust in its validity and quality. By using these public services, they could even leak privileged information outside the organization, creating security risks or inadvertently enabling IP theft. Meanwhile, the organization has no visibility at all into which models were used, where they were used, or how well they performed.

On top of this, the GenAI landscape is evolving rapidly as new models are released. Use cases that work today may break tomorrow, and without visibility into which LLMs (and which versions of them) are in use, trust in the results they produce will erode.

Many enterprises already find this situation untenable, and they are looking for ways to solve it. But where do you start?

Build AI accountability for trusted code generation

The productivity benefits of code generation LLMs are too enticing to ignore, but enterprises trying to build trustworthy software will need to retrofit (or potentially reimagine) their SDLC processes to accommodate these tools with the proper guardrails in place. At Sonar, we’re already discussing the best way forward with customers. Here are some steps to consider; leading enterprises on this path are already taking many of them.

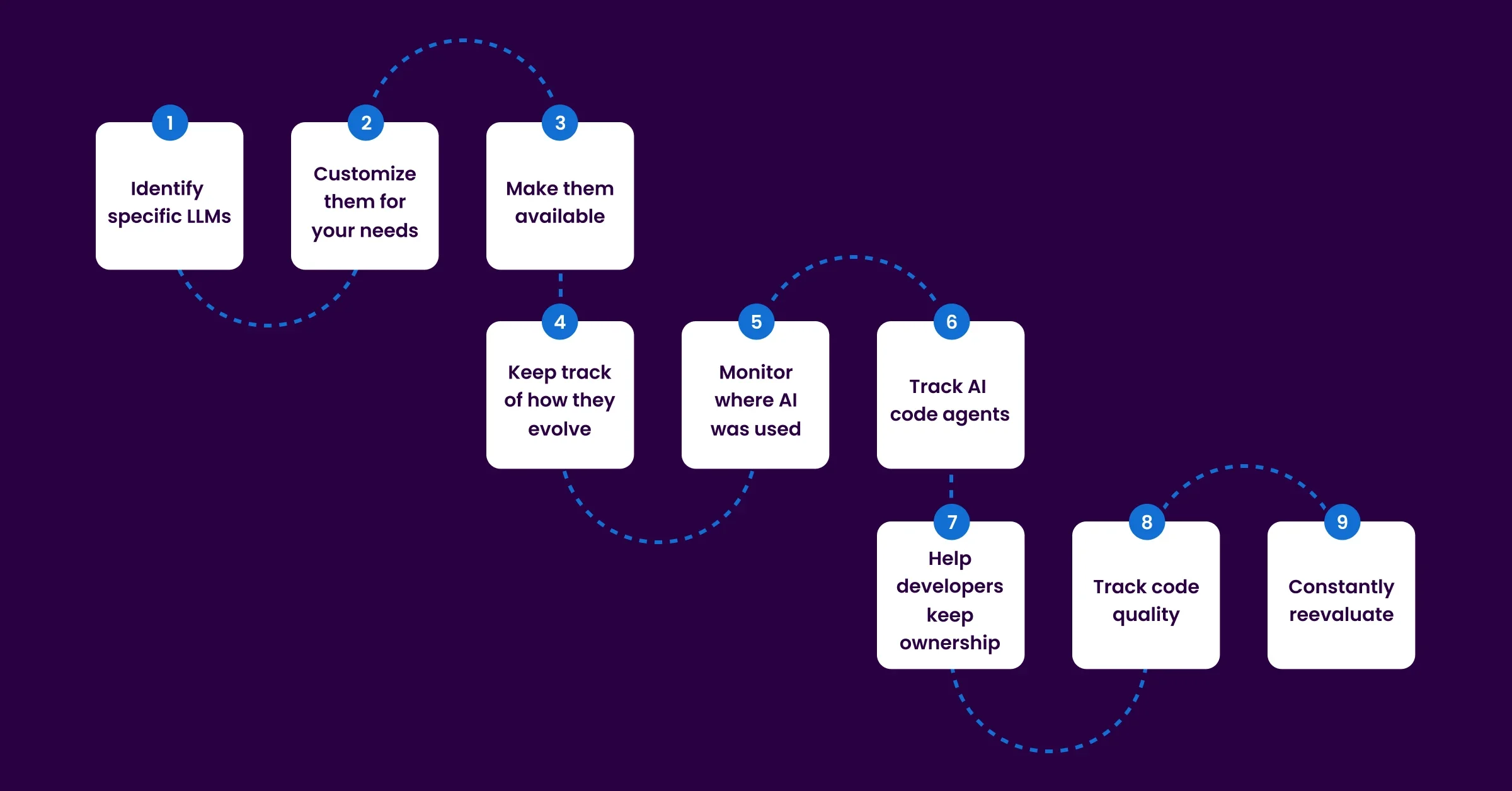

Identify specific LLMs

Carefully evaluate which LLMs are suitable for your developers to use, considering factors such as domain relevance, security, and compliance. This step helps ensure that only trusted models enter the workflow, reducing the risks associated with their use.
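One way to make an approved list enforceable rather than aspirational is to keep it as data that tooling can check. The sketch below assumes a hypothetical allowlist keyed by model name, where each entry records the exact versions that passed review and whether the model sends data outside your network. `ApprovedModel`, `ALLOWLIST`, `is_approved`, and the model names are illustrative only, not part of any product.

```python
from dataclasses import dataclass


# Hypothetical policy record for one approved model; the fields stand in for
# the evaluation criteria above (security and compliance in particular).
@dataclass(frozen=True)
class ApprovedModel:
    name: str
    versions: frozenset[str]       # exact versions that passed review
    data_may_leave_network: bool   # True for externally hosted services


# Hypothetical allowlist; names and versions are placeholders.
ALLOWLIST = {
    "internal-code-llm": ApprovedModel("internal-code-llm", frozenset({"1.2", "1.3"}), False),
    "vendor-model-x": ApprovedModel("vendor-model-x", frozenset({"2024-06"}), True),
}


def is_approved(name: str, version: str, handles_confidential_code: bool) -> bool:
    """Return True only if this model and exact version were approved and its
    data-handling posture fits the code being worked on."""
    model = ALLOWLIST.get(name)
    if model is None or version not in model.versions:
        return False
    # Confidential code must not go to a model that sends data off-network.
    if handles_confidential_code and model.data_may_leave_network:
        return False
    return True
```

Pinning exact versions, rather than just model names, means a silent upgrade on the provider side fails the check until someone reviews it.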

Customize them for your needs

Tailor each approved LLM to align with your specific requirements, ensuring the models fit seamlessly into existing workflows and address the right challenges. Customizations can include fine-tuning the LLMs on proprietary data, adjusting them for industry-specific use cases, or optimizing performance for certain tasks.

Make them available

Integrating approved LLMs directly into developer workspaces, such as IDEs and DevOps platforms, ensures that these tools are readily accessible during the development process. This seamless availability allows developers to leverage the right LLMs for tasks like code generation, bug fixing, and optimization without leaving their familiar environments.

Keep track of how they evolve

Closely monitor changes to the LLMs in use to ensure compatibility, stability, and security. This tracking allows teams to assess how updates or modifications to the LLMs are impacting existing projects and workflows. By keeping a detailed record of version changes, organizations can mitigate potential risks associated with unexpected changes in performance.
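A minimal sketch of such a record, assuming you can observe the model name and version reported with each request (for example, from a model gateway or IDE plugin logs). `ModelVersionLog` is a hypothetical class: it adds an entry only when a model’s version changes, so a later shift in code quality can be lined up against the date a new version first appeared.

```python
from datetime import date


class ModelVersionLog:
    """Hypothetical record of which version of each model was in use, and from when."""

    def __init__(self) -> None:
        # model name -> list of (first date seen, version), oldest first
        self._history: dict[str, list[tuple[date, str]]] = {}

    def record(self, model: str, version: str, seen_on: date) -> bool:
        """Record an observed version; return True if it differs from the last one seen."""
        history = self._history.setdefault(model, [])
        changed = bool(history) and history[-1][1] != version
        # Store the first observation and every subsequent change, nothing else.
        if not history or changed:
            history.append((seen_on, version))
        return changed

    def history(self, model: str) -> list[tuple[date, str]]:
        """Return a copy of the version history for one model."""
        return list(self._history.get(model, []))
```

Returning a flag from `record` lets the caller trigger a review or a fresh benchmark run at the moment a version change is detected.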

Monitor where AI was used

Whenever a developer uses an approved LLM, automatically tag the resulting code change as AI-assisted and log the specific LLM and version used. This tagging provides transparency, letting teams track which parts of the code were influenced by AI tools and ensuring accountability in the development process. Recording the LLM version also maintains a clear audit trail, making it easier to trace potential issues back to their source.
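Git already has a mechanism that suits this kind of tagging: trailers, the `Key: value` lines in the last paragraph of a commit message (where `Signed-off-by:` lines live). The sketch below assumes a hypothetical team convention of `AI-Usage`, `AI-Model`, and `AI-Model-Version` trailers. The key names and the `add_ai_trailers` helper are illustrative; in practice a commit hook or the IDE integration would apply something like this automatically.

```python
def add_ai_trailers(message: str, model: str, version: str, mode: str = "assisted") -> str:
    """Append hypothetical AI-provenance trailers to a commit message.

    Git treats "Key: value" lines in the final paragraph of a message as
    trailers. The key names here are an assumed convention, not a standard.
    Assumes the message has no existing trailer block; otherwise the new
    lines should be merged into it rather than added as a new paragraph.
    """
    if mode not in ("assisted", "generated"):
        raise ValueError("mode must be 'assisted' or 'generated'")
    trailers = [
        f"AI-Usage: {mode}",
        f"AI-Model: {model}",
        f"AI-Model-Version: {version}",
    ]
    # A blank line separates the message body from the trailer block.
    return message.rstrip("\n") + "\n\n" + "\n".join(trailers) + "\n"
```

For example, `add_ai_trailers("Fix null check in parser", "internal-code-llm", "1.3")` yields a message ending in `AI-Usage: assisted`, `AI-Model: internal-code-llm`, and `AI-Model-Version: 1.3`. Keeping provenance in the commit itself means it travels with the repository history instead of living in a separate system.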

Track AI code agents

Similarly, when an autonomous AI agent commits code, tag the commit as AI-generated. Marking these commits lets organizations maintain accountability, track the performance of autonomous agents, and assess the impact of AI-generated contributions on the overall codebase.
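Tags are only useful if something reads them back. The sketch below classifies a commit from its message, assuming commits carry a hypothetical `AI-Usage` trailer whose value is `generated` for autonomous agents and `assisted` for developer-driven AI use. The parser is deliberately simplified (Git’s own `git interpret-trailers` handles more cases, such as values continued across lines), and commits without the trailer are reported as `untagged` rather than assumed to be human-written.

```python
def parse_trailers(message: str) -> dict[str, str]:
    """Parse "Key: value" lines from the last paragraph of a commit message.

    Simplified: ignores continued (multi-line) values and accepts the last
    paragraph only if every line in it looks like a trailer.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # a subject line alone carries no trailers
    trailers: dict[str, str] = {}
    for line in paragraphs[-1].strip().splitlines():
        key, sep, value = line.partition(":")
        if not sep or not key or " " in key:
            return {}  # not a trailer block
        trailers[key] = value.strip()
    return trailers


def classify_commit(message: str) -> str:
    """Return 'ai-generated', 'ai-assisted', or 'untagged' under the assumed convention."""
    usage = parse_trailers(message).get("AI-Usage")
    if usage == "generated":
        return "ai-generated"
    if usage == "assisted":
        return "ai-assisted"
    return "untagged"
```

Running `classify_commit` over the log (for example, on the output of `git log --format=%B` split per commit) gives the raw counts needed to compare agent contributions with the rest of the codebase.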

Help developers keep ownership

Equip developers with tools to validate and review AI-generated code. These tools should help developers thoroughly assess the code, understand its functionality, and verify that it meets project requirements. Developers can then confidently integrate the code into the larger system while maintaining control and accountability over the final product.

Track code quality

Evaluate the performance and quality of code produced by different LLMs to benchmark them against one another. By regularly measuring code quality metrics associated with software reliability, security, and maintainability, organizations can track how the performance and reliability of each model evolves over time.
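As an illustration of benchmarking, the sketch below normalizes issue counts by the amount of code each model produced, so a heavily used model is not penalized simply for volume. The `ChangeRecord` fields and the issues-per-1,000-lines metric are assumptions; in practice the counts would come from static analysis results joined with the provenance tags on each change.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ChangeRecord:
    """One AI-assisted change and the issues analysis found in it (hypothetical fields)."""
    model: str
    lines_changed: int
    reliability_issues: int
    security_issues: int
    maintainability_issues: int


CATEGORIES = ("reliability", "security", "maintainability")


def issues_per_kloc(records: list[ChangeRecord]) -> dict[str, dict[str, float]]:
    """Aggregate issue density (issues per 1,000 changed lines) per model and category."""
    totals: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for r in records:
        t = totals[r.model]
        t["lines"] += r.lines_changed
        t["reliability"] += r.reliability_issues
        t["security"] += r.security_issues
        t["maintainability"] += r.maintainability_issues
    report: dict[str, dict[str, float]] = {}
    for model, t in totals.items():
        if t["lines"] == 0:
            continue  # no basis for a density figure
        kloc = t["lines"] / 1000
        report[model] = {cat: t[cat] / kloc for cat in CATEGORIES}
    return report
```

With two changes of 500 lines from model `a` (1 + 1 reliability issues) and one 2,000-line change from model `b` (2 reliability issues), both models score 2.0 and 1.0 reliability issues per 1,000 lines respectively, even though `b` produced twice as much code.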

Constantly reevaluate

Implement comprehensive reporting to audit LLM performance across the enterprise. Track key metrics such as code quality, defect rates, performance, and cost to gain insight into how effectively LLMs are contributing to development efforts and whether they align with business goals. By regularly reviewing this data, organizations can ensure that the LLMs in use remain a strategic asset, helping to maintain high standards of productivity and efficiency.
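One simple form of regular reevaluation is to compare each model’s metrics with the previous reporting period and flag anything that worsened beyond a tolerance. The sketch below assumes two hypothetical per-model metrics, defect density and cost per accepted change, where higher is worse for both; `PeriodMetrics`, `flag_regressions`, and the 10% default tolerance are illustrative choices rather than recommended thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodMetrics:
    """Hypothetical per-model metrics for one reporting period."""
    defects_per_kloc: float
    cost_per_accepted_change: float  # in whatever currency your billing uses


METRICS = ("defects_per_kloc", "cost_per_accepted_change")


def flag_regressions(previous: dict[str, PeriodMetrics],
                     current: dict[str, PeriodMetrics],
                     tolerance: float = 0.10) -> dict[str, list[str]]:
    """Return, per model, the metrics that worsened by more than `tolerance`
    (a fraction, e.g. 0.10 = 10%) relative to the previous period."""
    flags: dict[str, list[str]] = {}
    for model, now in current.items():
        before = previous.get(model)
        if before is None:
            continue  # new model: no baseline to compare against yet
        worse = []
        for field in METRICS:
            old, new = getattr(before, field), getattr(now, field)
            # Higher is worse for both metrics in this sketch.
            if new > old * (1 + tolerance):
                worse.append(field)
        if worse:
            flags[model] = worse
    return flags
```

A relative tolerance keeps the check meaningful for models with very different baselines, and skipping models with no prior period avoids flagging a newly approved model before there is anything to compare.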

How Sonar helps build trust in AI code

Sonar has a long history of helping organizations of all sizes build and improve their code quality, so we have a solid base of experience to apply to these challenges.

Sonar can be an indispensable resource for developers reviewing AI-generated code. Our code quality tools highlight issues that commonly appear in AI-generated code and help ensure that developers don’t miss problems hidden in code they didn’t write themselves. This validation step helps developers take back ownership of the code generated by LLMs.

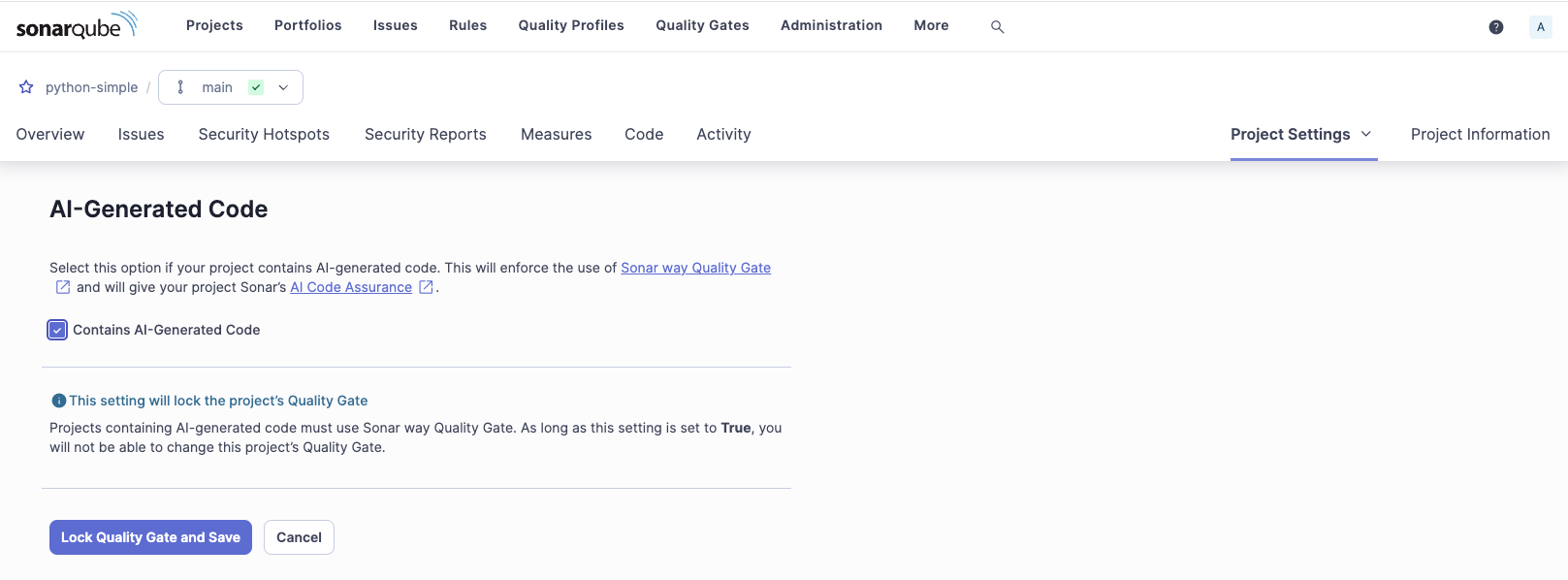

Sonar AI Code Assurance allows project owners to tag their AI code so it goes through an AI-specific validation process.

For stakeholders, Sonar can collect and report on the accountability data described above. Sonar’s AI Code Assurance feature is a first step towards building out our capabilities here. When combined with data from other tooling that measures the performance of different AI models, organizations will be able to view real-time data on how different models perform. Over time, these measures will build trust in certain models over others and help organizations make data-driven decisions about where to invest their resources.

As AI becomes more integrated into the SDLC, maintaining trust in your codebase requires new strategies and tools. By carefully selecting, customizing, and monitoring LLMs, organizations can harness the productivity benefits of AI without sacrificing code quality or accountability. With solutions like Sonar's AI Code Assurance, developers can validate AI-generated code, while stakeholders can track performance metrics to make informed decisions. By adopting these practices, enterprises can confidently navigate the evolving landscape of AI-driven development while ensuring their code remains secure, reliable, and trustworthy.

If you’re interested in exploring how Sonar can help your organization build trust in AI-generated code, contact us for a demo!