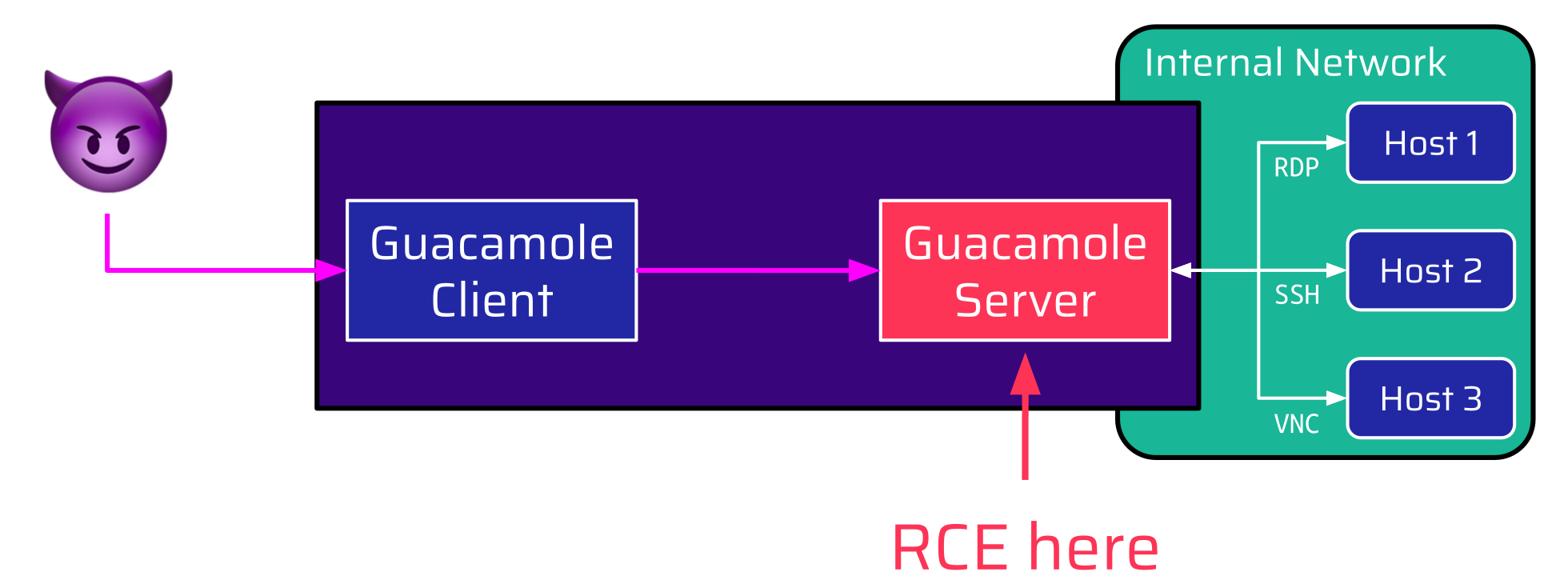

This is the second article in our blog post series covering two critical vulnerabilities in the remote desktop gateway Apache Guacamole. Guacamole allows users to access remote machines via a web browser. The Guacamole gateway is usually the only externally accessible instance, granting access to remote machines isolated in an organization’s internal network.

In the first article, we explained how Guacamole’s interesting architecture connects a Java component with a C backend server and how both of these components slightly disagree about their communication, introducing a parser differential vulnerability (CVE-2023-30575).

In this article, we will see that the requirement of high parallelism to serve and share hundreds of connections at the same time makes an application like Guacamole also prone to concurrency issues. We will dive into the world of glibc heap exploitation and ultimately gain remote code execution.

We also presented the content of this blog post at Hexacon23. A recording of the talk can be found here: YouTube: HEXACON2023 - An Avocado Nightmare by Stefan Schiller.

Parallelism

Parallelism has been around for decades but is still a source of severe security vulnerabilities nowadays. Doing things simultaneously on its own is not really a problem. If each worker is working on a self-contained task that is independent of another worker’s task, there are no issues with concurrency. The challenges arise when the same resource needs to be accessed simultaneously.

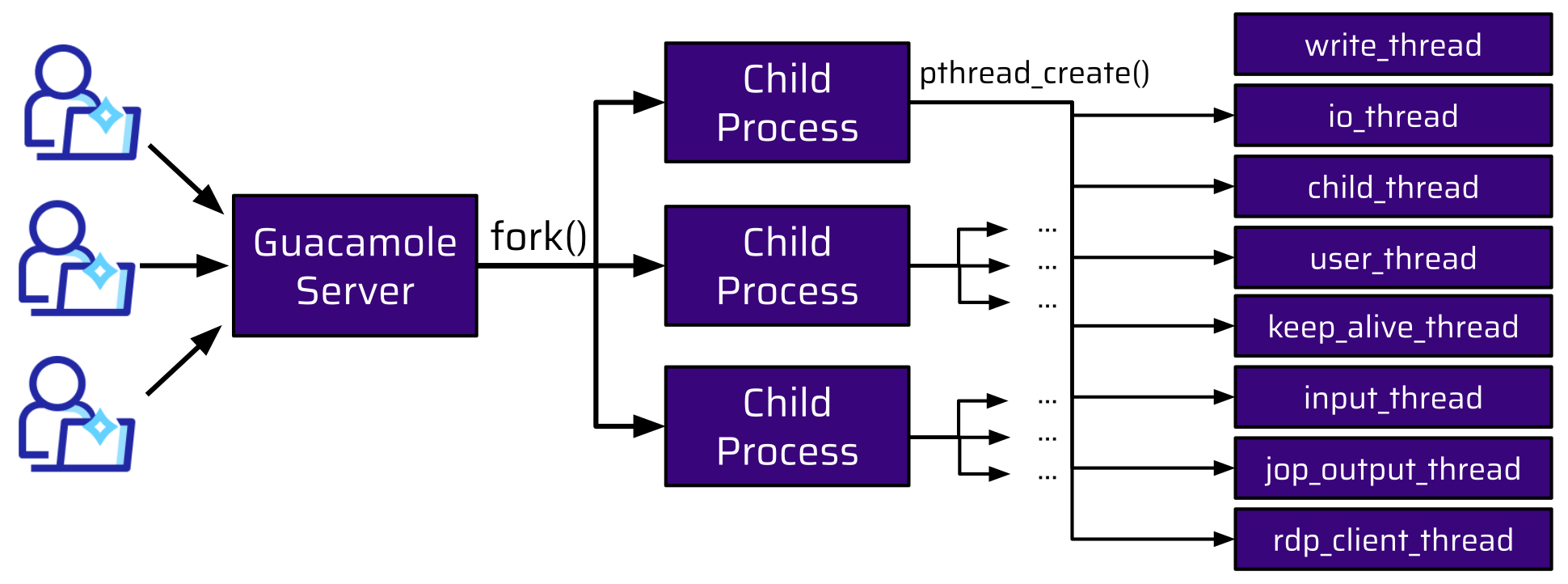

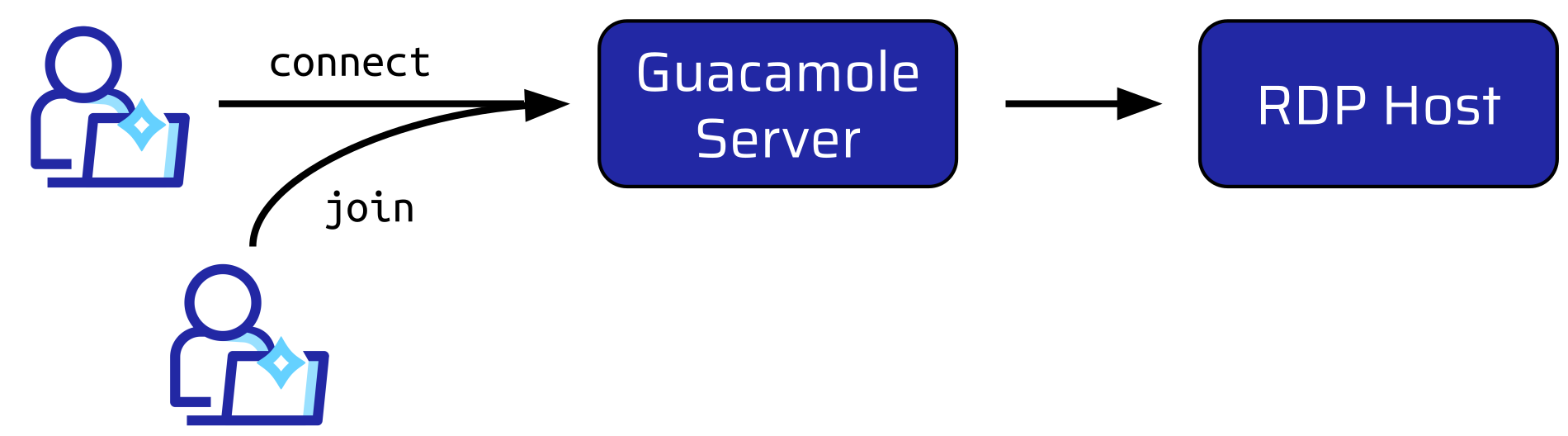

So, how many workers does Guacamole employ? When a user connects to the Guacamole Server, the main process forks a new child process. When another user connects, a new child process is forked. This is done for every new connection:

Each of these child processes is responsible for:

- handling the user connection,

- initiating and maintaining the connection to the internal host,

- communicating with the parent process,

- and so forth.

All of this has to be done simultaneously. This means there are a lot of threads. The threads shown in the graphic above are just a few of them for each forked child process.

Based on this observation, we decided to spend some time looking for concurrency issues. In general, everything seemed pretty solid. All threads are loosely coupled, and mutexes are used to ensure exclusive access to shared resources.

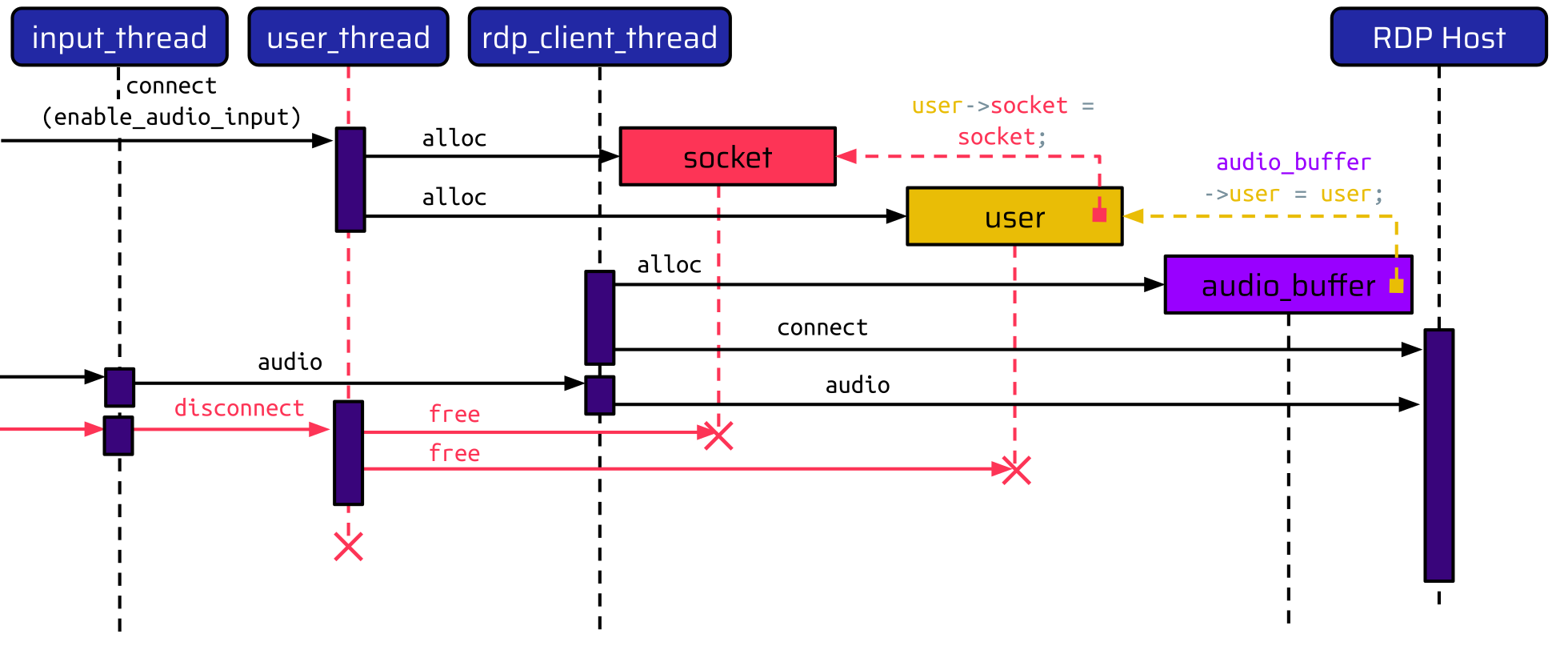

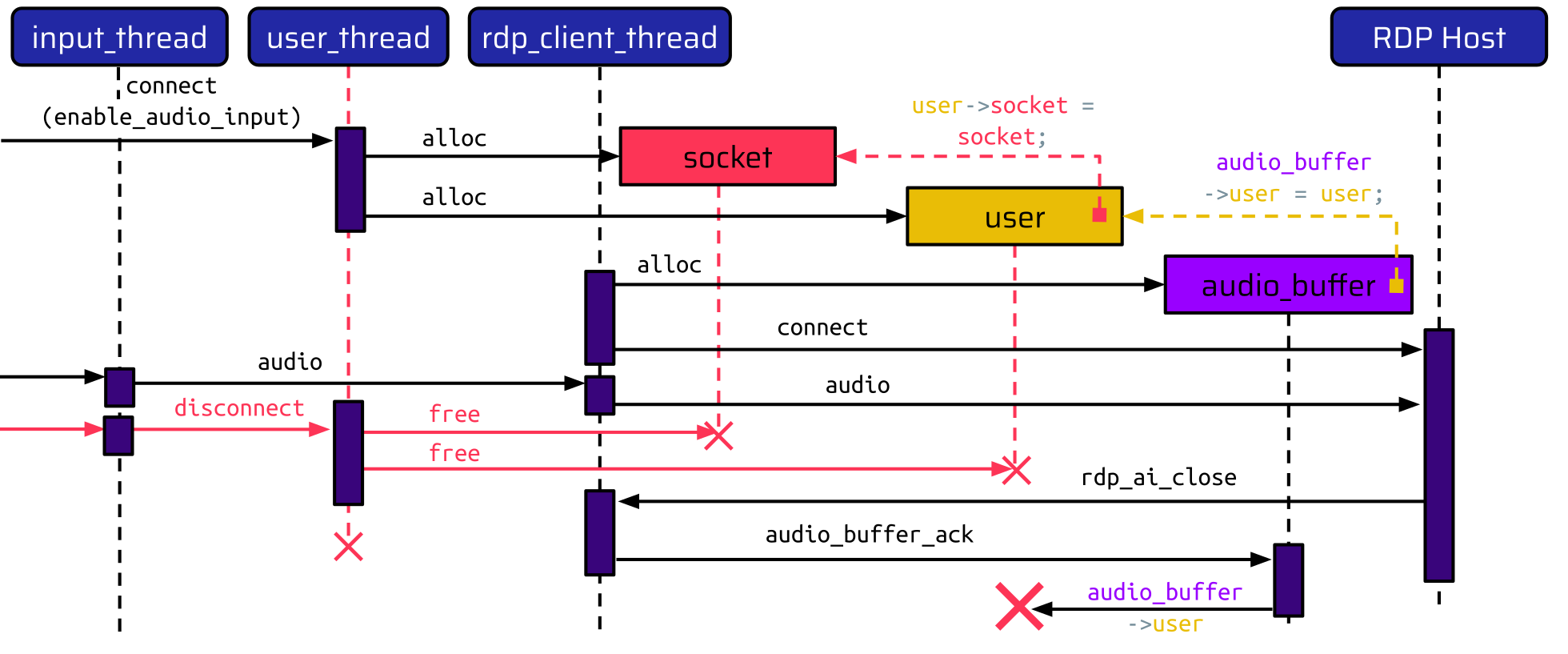

However, the audio input feature looked very interesting. This feature allows a user to transmit audio data from a locally attached microphone to the Guacamole Server, which is then forwarded to the RDP host. Let’s have a look at the related RDP connection flow:

There are three threads involved here:

- The input_thread,

- the user_thread, and

- the rdp_client_thread.

The user_thread handles the initial connect instruction and allocates two data structures: one for the socket and one for the user. The user holds a pointer to the socket allocated before. After this, the RDP connection can be initiated using the rdp_client_thread. When enable_audio_input is set to true, this thread allocates an additional audio buffer, which holds a pointer to the user. Thus, there is a pointer chain from the audio buffer to the user to the socket.

After all data structures have been created, the rdp_client_thread establishes the RDP connection to the internal host. If the user now speaks into their microphone, the input_thread receives an audio instruction, which is translated to the corresponding RDP audio message and transmitted to the RDP host. If the user now decides to disable remote audio on the RDP host by selecting the checkbox shown in the above animation, the RDP host sends an audio input close message, which is handled by the rdp_client_thread. The rdp_client_thread acknowledges this message by calling the audio_buffer_ack function. This function only has access to the message and the audio buffer. In order to send the acknowledgment message, it needs to retrieve the socket. Thus, it traverses the pointers from the audio buffer to the user and to the socket. The first function being called on the socket is its lock_handler function.
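The pointer chain described above can be sketched in a few lines of C. This is a minimal illustration, not the Guacamole source: all `sketch_` types and functions are hypothetical stand-ins, and only the order of dereferences (audio buffer, then user, then socket, then lock_handler) is taken from the text.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily simplified stand-ins for the real structures. */
typedef struct sketch_socket sketch_socket;
struct sketch_socket {
    void (*lock_handler)(sketch_socket *self); /* first handler used when sending */
    int lock_calls;                            /* bookkeeping for this sketch only */
};

typedef struct sketch_user {
    void *reserved;         /* placeholder for whatever precedes the pointer */
    sketch_socket *socket;  /* user -> socket */
} sketch_user;

typedef struct sketch_audio_buffer {
    sketch_user *user;      /* audio buffer -> user */
} sketch_audio_buffer;

/* Stand-in lock handler that only counts how often it was called. */
static void sketch_count_lock(sketch_socket *self) {
    self->lock_calls++;
}

/* Mirrors the acknowledgment path: only the audio buffer is available,
 * so the socket has to be reached through two pointer dereferences. */
static int sketch_audio_buffer_ack(sketch_audio_buffer *buf) {
    assert(buf != NULL && buf->user != NULL);
    sketch_socket *socket = buf->user->socket;
    socket->lock_handler(socket);
    return socket->lock_calls;
}

/* Builds the chain, acknowledges twice, and returns the final lock count. */
static int sketch_demo_ack(void) {
    sketch_socket socket = { sketch_count_lock, 0 };
    sketch_user user = { NULL, &socket };
    sketch_audio_buffer buf = { &user };
    sketch_audio_buffer_ack(&buf);
    return sketch_audio_buffer_ack(&buf);
}
```

Nothing in this path checks whether the user or the socket is still alive, which is what matters in the next section.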

Audio Buffer Use-After-Free

What could possibly go wrong here?

Let’s assume the RDP connection is established, and the user has already spoken into their microphone to transmit some audio data. Now, the user decides to disconnect from the session:

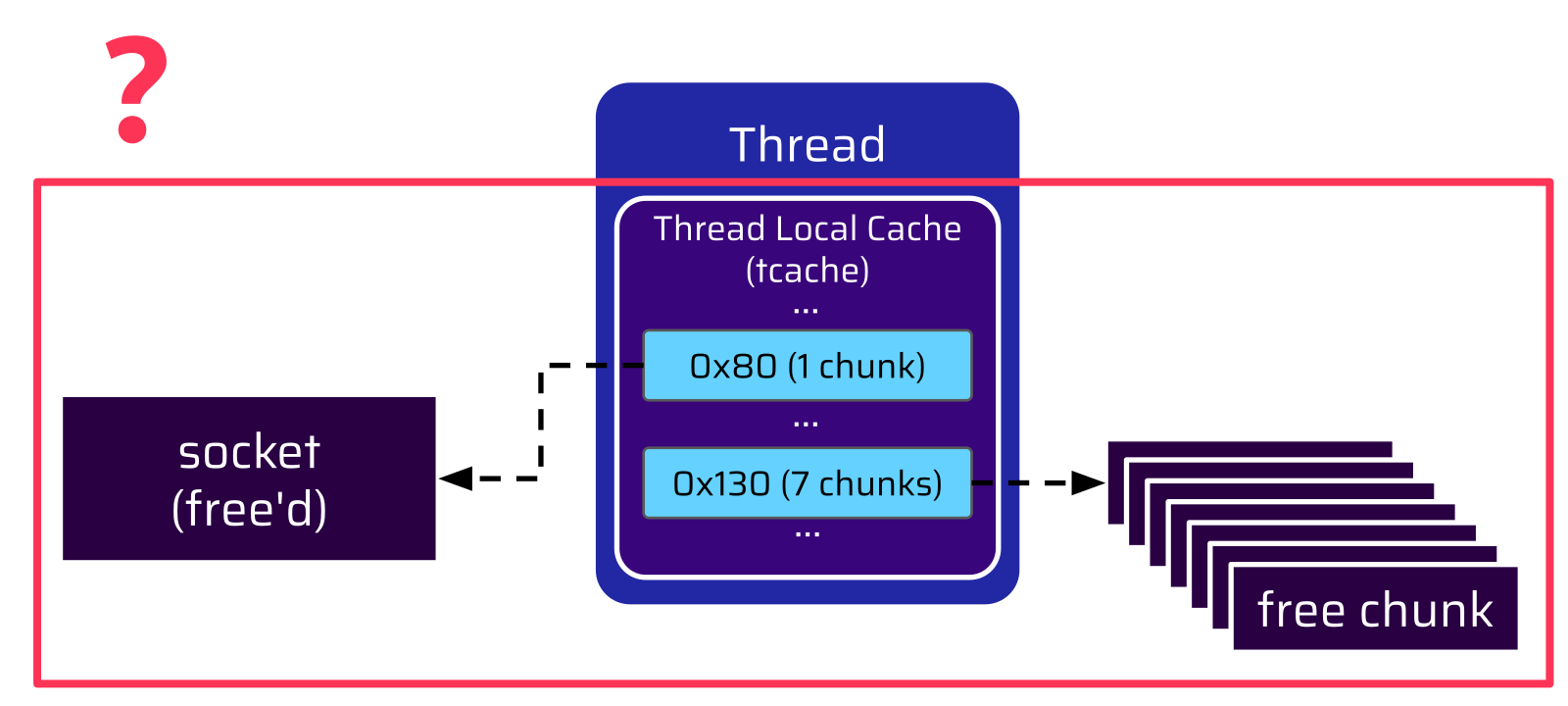

This tears down the user_thread, which frees the memory allocated for the socket and the user. The rdp_client_thread, responsible for handling the RDP connection, is still running, though, and is waiting for messages from the RDP server. So, if the RDP server now sends a message to close the audio channel, the rdp_client_thread tries to send the acknowledgment message:

Thus, it accesses the user pointer in the audio buffer, which is already a dangling pointer. So if the user disconnects first, and then the RDP host closes the audio input, we have a classical Use-After-Free vulnerability (CVE-2023-30576).

This vulnerability can be triggered with 100% reliability by making the Guacamole Server connect to an XRDP server via the Guacamole protocol injection and then disconnect from the session. Unlike a Windows RDP server, XRDP explicitly closes the audio input channel before the connection is terminated, which is sufficient to trigger the vulnerability.

Use-After-Free Exploit - Classical Approach

Let’s determine how an attacker could exploit this vulnerability. We start by looking at how such a Use-After-Free vulnerability would usually be exploited.

Once the user disconnects, the user and socket are freed. At this point, there are actually two dangling pointers: The first one is the user pointer in the audio buffer, and the second one is the socket pointer of the already freed user. The most straightforward approach is to reallocate the freed socket and populate the lock_handler function pointer with some address the attacker wants to call:

Once the RDP host closes the audio channel:

- The user pointer in the audio buffer is referenced,

- the socket pointer in the user is referenced, and

- the attacker-controllable lock_handler function is called.

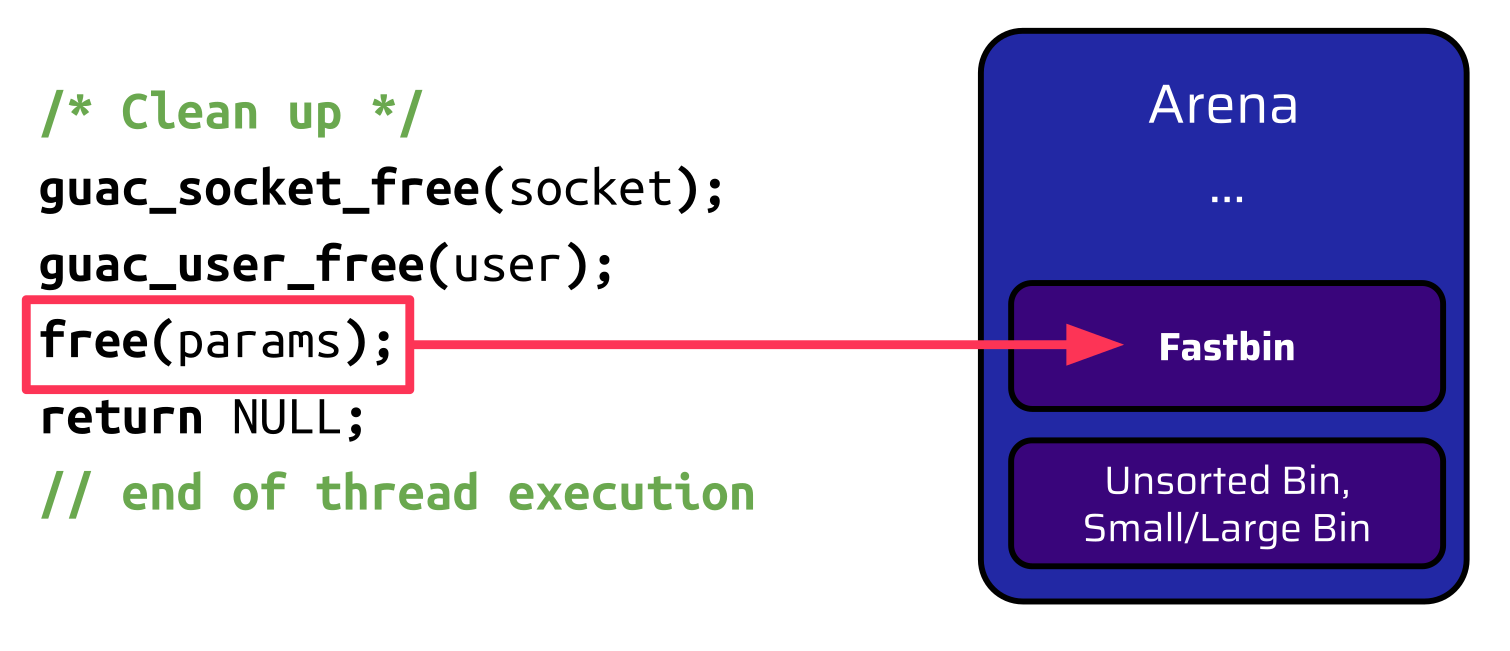

Theoretically, this is very easy. But here is the first challenge: The chunks are freed right before the thread ends its execution. It is not possible to do any reallocation in this thread:

/* Clean up */

guac_socket_free(socket); // <-- socket freed

guac_user_free(user); // <-- user freed

free(params);

{% mark red %}return NULL;

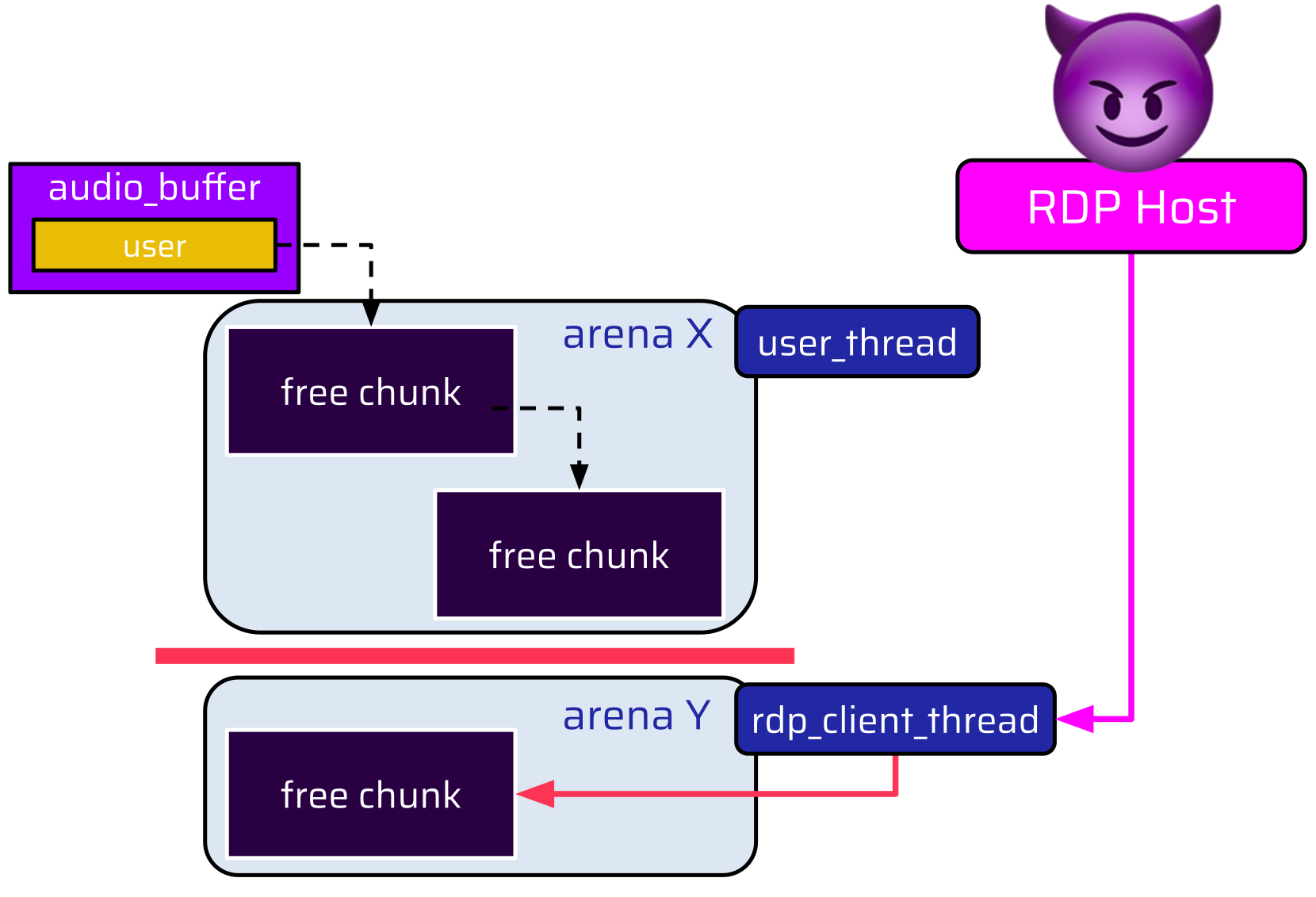

// end of thread execution{% mark %}It would be possible to do an allocation from the RDP connection, which is handled by the rdp_client_thread and is still alive, but since this is another thread, it is also attached to a different arena. Every allocation an attacker could make via the rdp_client_thread is served from this arena (arena Y):

Accordingly, this classical reallocation approach doesn’t seem to work. We need to come up with something else.

Use-After-Free Exploit - Without Reallocation

An alternative approach is to exploit the Use-After-Free vulnerability without any reallocation by leveraging the glibc’s heap internals.

When a chunk like the socket data structure is freed, there are basically three possibilities:

- The chunk is put into a tcache bin,

- the chunk is put into a bin of its arena, or,

- the chunk is merged with the surrounding chunks.

Because of its size, the socket is suitable for the 0x80 tcache bin. Thus, the head pointer of this tcache bin is updated to reference the now free chunk. Next, the user is freed. Its size is 0x130 bytes, and the corresponding tcache bin is empty. Hence, it is added to this bin:

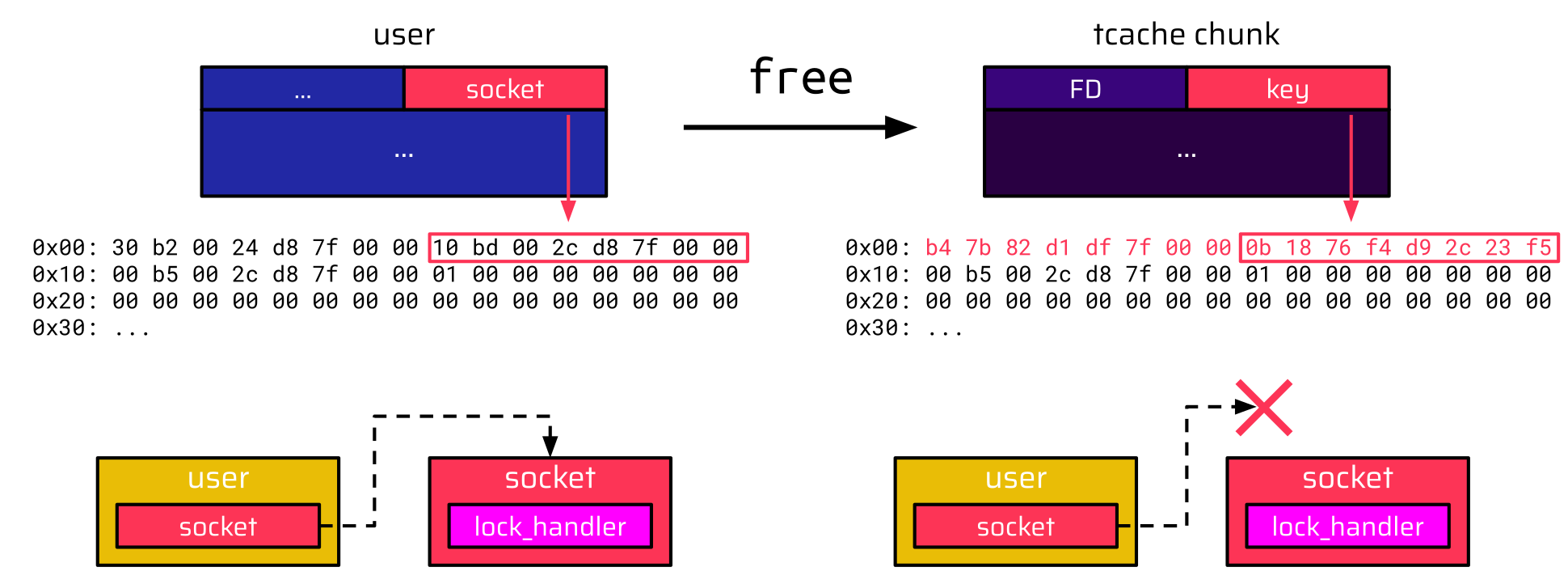
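How a chunk size maps to a tcache bin can be written down as a small calculation. The sketch below assumes the usual 64-bit glibc constants (8-byte size field, 16-byte alignment, 64 tcache bins); the `sketch_` names are hypothetical, and the formulas are a simplified restatement of glibc's internal macros rather than a copy of them.

```c
#include <assert.h>
#include <stddef.h>

/* Constants for 64-bit glibc; treat them as assumptions of this sketch. */
#define SKETCH_SIZE_SZ     8u     /* size of the chunk size field */
#define SKETCH_ALIGNMENT   16u    /* chunk alignment */
#define SKETCH_MINSIZE     0x20u  /* smallest possible chunk */
#define SKETCH_TCACHE_BINS 64u    /* number of tcache bins */

/* Chunk size that a malloc(request) call ends up with. */
static size_t sketch_chunk_size(size_t request) {
    size_t size = (request + SKETCH_SIZE_SZ + SKETCH_ALIGNMENT - 1)
                  & ~(size_t)(SKETCH_ALIGNMENT - 1);
    return size < SKETCH_MINSIZE ? SKETCH_MINSIZE : size;
}

/* Index of the tcache bin serving a chunk size, or -1 if it is too large. */
static int sketch_tcache_index(size_t chunk_size) {
    assert(chunk_size >= SKETCH_MINSIZE && chunk_size % SKETCH_ALIGNMENT == 0);
    size_t idx = (chunk_size - SKETCH_MINSIZE) / SKETCH_ALIGNMENT;
    return idx < SKETCH_TCACHE_BINS ? (int)idx : -1;
}
```

Under these assumptions, the 0x80 socket chunk maps to bin 6 and the 0x130 user chunk to bin 17, so the two frees never compete for the same bin.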

Let’s have a closer look at what actually happens when the user is freed and put into the tcache bin. We are particularly interested in the user’s socket pointer. This pointer is located at offset 8, as shown in the image below, and references the socket. When the user is freed, it becomes a chunk in the tcache bin. Thus, the first 8 bytes are populated with the forward pointer (FD) of the singly linked list. However, the next 8 bytes are also populated with the tcache key. This results in the socket pointer being overwritten and corrupted:

Even if we could control the lock_handler function pointer in the socket, the socket would not be referenced anymore. Again, this approach feels like a dead-end.
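The overlap between the freed user and the tcache bookkeeping can be modelled without touching the real allocator. In the sketch below, only the socket pointer at offset 8 comes from the text; the first field of `sketch_freed_user`, the helper names, and the key value are hypothetical, and the two-pointer entry layout is an assumption about 64-bit glibc.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical user layout: only the socket pointer at offset 8 is taken
 * from the article; the first field is a placeholder. */
typedef struct sketch_freed_user {
    void *first_field;  /* offset 0 */
    void *socket;       /* offset 8 on 64-bit targets */
} sketch_freed_user;

/* Shape of a tcache entry, which is written into the freed user data. */
typedef struct sketch_tcache_entry {
    void *next;         /* forward pointer (FD) */
    void *key;          /* tcache key */
} sketch_tcache_entry;

/* Models what free() writes into the first 16 bytes of the chunk. */
static void sketch_free_into_tcache(void *chunk_data, void *next, void *key) {
    sketch_tcache_entry entry = { next, key };
    memcpy(chunk_data, &entry, sizeof entry);
}

/* Returns 1 if the socket pointer was replaced by the tcache key. */
static int sketch_socket_clobbered(void) {
    int real_socket = 0;
    int key_target = 0;  /* stands in for whatever value the key has */
    sketch_freed_user user = { NULL, &real_socket };
    assert(offsetof(sketch_freed_user, socket) == sizeof(void *));
    sketch_free_into_tcache(&user, NULL, &key_target);
    return user.socket == (void *)&key_target;
}
```

The key is not attacker-controlled, so following the clobbered pointer leads nowhere useful.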

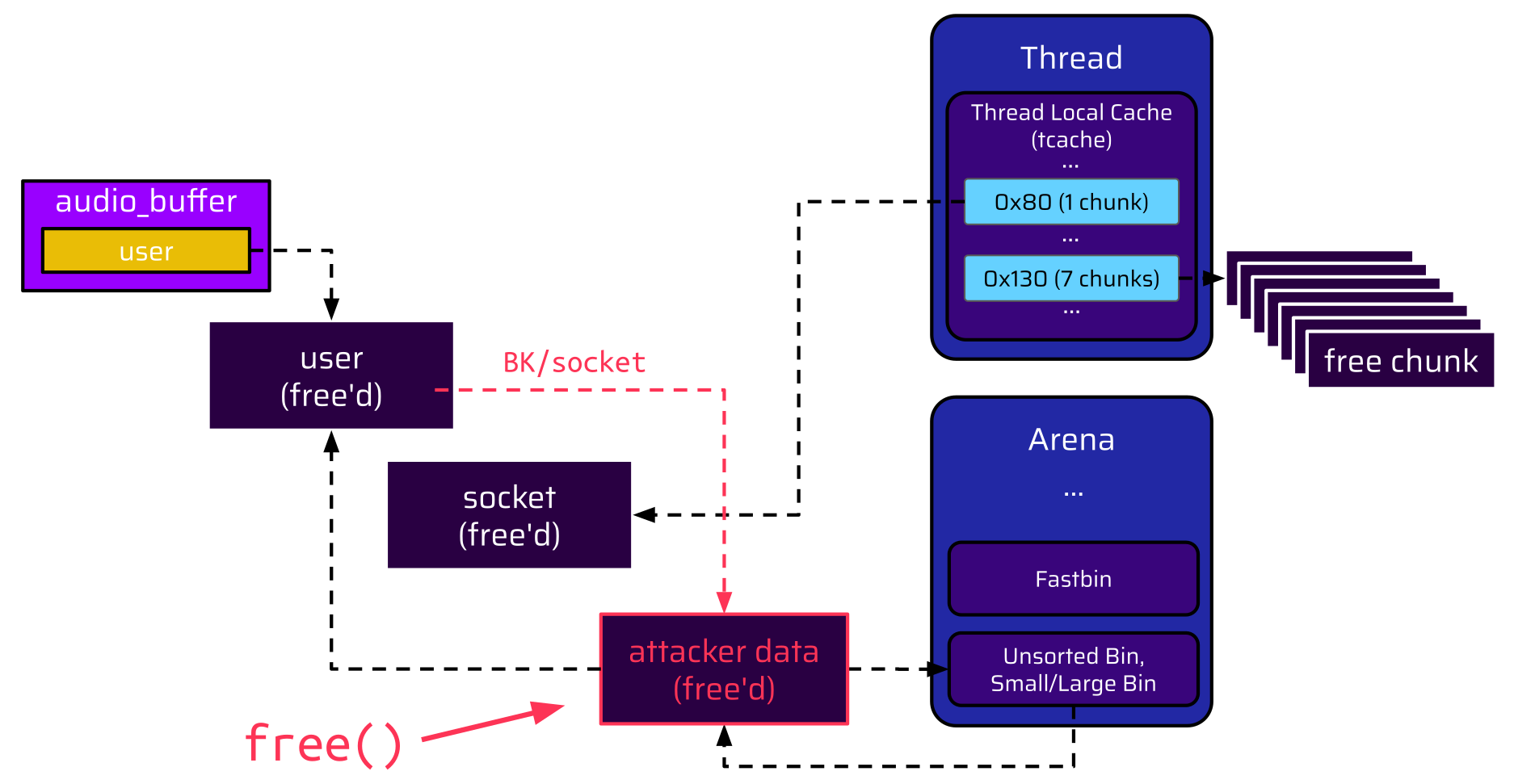

However, an attacker can prevent the user from ending up in the tcache bin with some preparation before the user is freed. Since every tcache bin is by default limited to 7 entries, the attacker can make the application allocate and free seven chunks with this size before disconnecting. This exhausts the 0x130 tcache bin, and thus the user cannot be put here. Due to the size of the user, it is also not suitable for a fastbin and ends up in the unsorted bin of its arena:
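The effect of exhausting a tcache bin can be sketched as a simplified decision function. The thresholds are the defaults assumed here for 64-bit glibc (7 entries per bin, fastbin limit 0x80, tcache limit 0x410); consolidation with neighbouring chunks and mmapped chunks are deliberately ignored, and all names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_TCACHE_COUNT 7u     /* default entries per tcache bin */
#define SKETCH_MAX_TCACHE   0x410u /* largest chunk size served by the tcache */
#define SKETCH_MAX_FAST     0x80u  /* default largest fastbin chunk size */

typedef enum { DEST_TCACHE, DEST_FASTBIN, DEST_UNSORTED } sketch_dest;

/* Simplified destination of a freed chunk, given the current fill level
 * of the matching tcache bin. Ignores consolidation and mmapped chunks. */
static sketch_dest sketch_free_destination(size_t chunk_size, unsigned bin_count) {
    assert(chunk_size >= 0x20 && chunk_size % 16 == 0);
    if (chunk_size <= SKETCH_MAX_TCACHE && bin_count < SKETCH_TCACHE_COUNT)
        return DEST_TCACHE;
    if (chunk_size <= SKETCH_MAX_FAST)
        return DEST_FASTBIN;
    return DEST_UNSORTED;
}

/* Frees `frees` chunks of one size in a row and reports where the last lands. */
static sketch_dest sketch_last_destination(size_t chunk_size, unsigned frees) {
    assert(frees > 0);
    unsigned bin_count = 0;
    sketch_dest dest = DEST_TCACHE;
    for (unsigned i = 0; i < frees; i++) {
        dest = sketch_free_destination(chunk_size, bin_count);
        if (dest == DEST_TCACHE)
            bin_count++;
    }
    return dest;
}
```

In this model, the eighth free of a 0x130 chunk is the first one that bypasses the tcache, which corresponds to the user being freed after seven preparatory chunks.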

Let’s have a closer look at what exactly is happening when the user is put into the unsorted bin instead of a tcache bin.

Again, before the user is freed, it holds a pointer to the socket at offset 8, as shown in the image below. Once the user is freed, it is put into the unsorted bin. Because this bin is doubly linked, a backward pointer (BK) is inserted at offset 8. This, again, overwrites the socket pointer, but now the socket pointer is a valid pointer, which references the unsorted bin in the arena:

The memory the socket pointer is referencing (the unsorted bin) cannot be directly controlled. An attacker would like to place a valid socket there, where the lock_handler function pointer can be set to an arbitrary address, but this memory in the arena only holds pointers to heap chunks. If one of these were interpreted as a function pointer, this would cause an immediate segmentation fault since heap chunks are marked as non-executable (NX):

However, if an attacker could free another chunk to the unsorted bin, it would be placed at the head of the doubly linked list. Thus, the backward pointer (BK) of the freed user chunk would point to this free chunk:
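The list manipulation behind this idea can be reproduced with a toy doubly linked list. The sketch models only the FD and BK pointers and inserts at the head of a circular list, which is how the unsorted bin behaves on free; chunk headers, sizes, and all integrity checks are left out, and the names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal free-chunk view: only the two list pointers are modelled. */
typedef struct sketch_chunk {
    struct sketch_chunk *fd;  /* forward pointer (FD) */
    struct sketch_chunk *bk;  /* backward pointer (BK) */
} sketch_chunk;

/* An empty circular list: the bin head points at itself. */
static void sketch_bin_init(sketch_chunk *bin) {
    bin->fd = bin;
    bin->bk = bin;
}

/* Inserts a freed chunk at the head of the list. */
static void sketch_unsorted_insert(sketch_chunk *bin, sketch_chunk *p) {
    assert(bin != NULL && p != NULL);
    sketch_chunk *fwd = bin->fd;
    p->fd = fwd;
    p->bk = bin;
    bin->fd = p;
    fwd->bk = p;
}

/* Returns 1 if the user's BK first references the bin and, after a
 * second free, references the more recently freed chunk instead. */
static int sketch_bk_follows_next_free(void) {
    sketch_chunk bin, user, later;
    sketch_bin_init(&bin);
    sketch_unsorted_insert(&bin, &user);
    int pointed_at_bin = (user.bk == &bin);
    sketch_unsorted_insert(&bin, &later);
    return pointed_at_bin && user.bk == &later && later.fd == &user;
}
```

The second insert rewrites the BK of the chunk that was freed first, so the allocator itself redirects the former socket pointer.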

This raises the question: Is it possible to free another chunk after the user is freed?

And, as you may remember, there is actually another free call right before the thread terminates.

But, due to its size, this chunk is placed in the tcache bin or fastbin:

Thus, the thread ends its execution and it’s game over.

But then we were wondering: Since the thread terminates… What is actually happening to its tcache data structure?

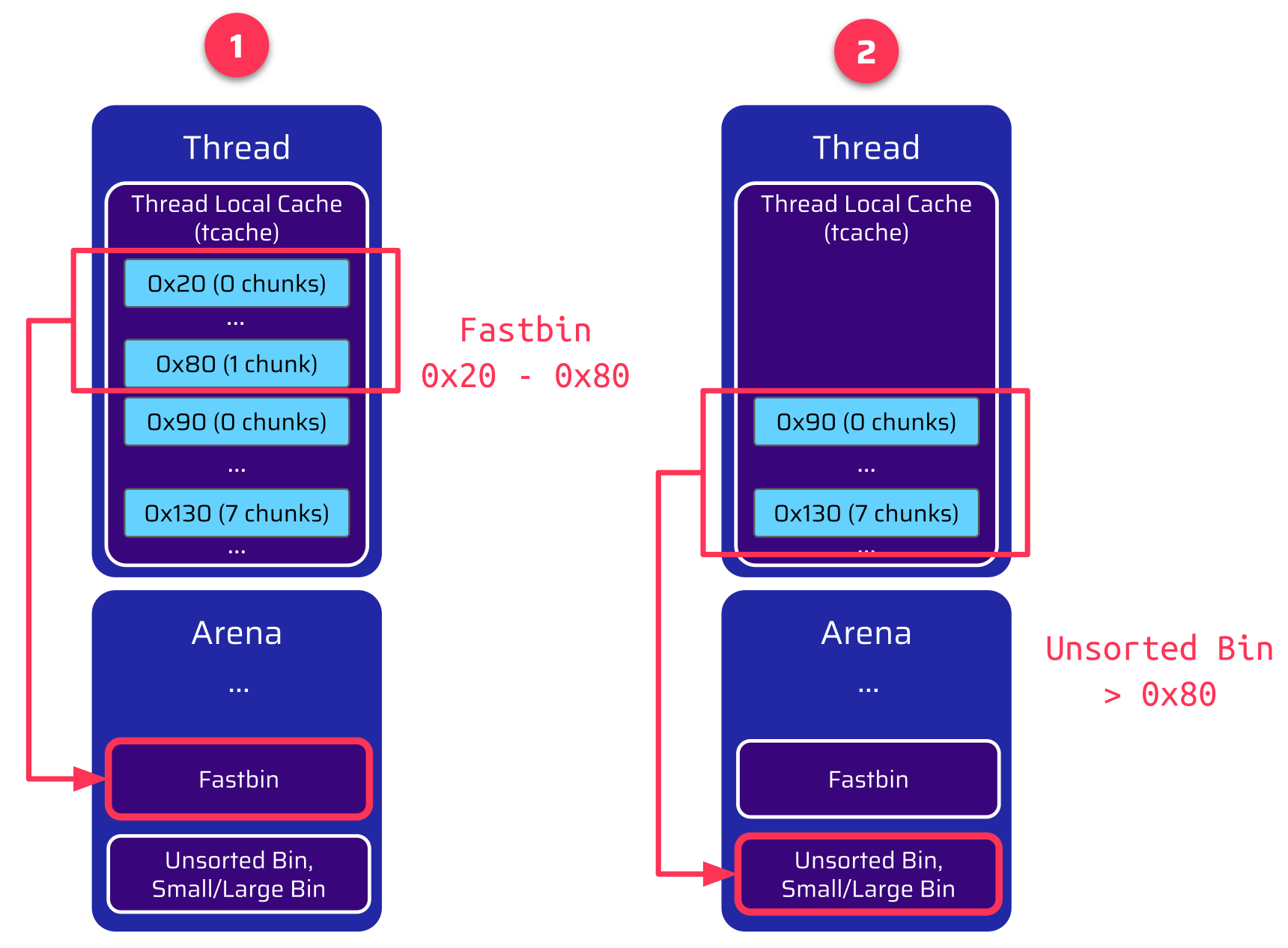

The answer to this can be found in the glibc source code. When a thread is terminated, the function tcache_thread_shutdown is executed. This function frees all chunks in the tcache (e) and the tcache data structure (tcache_tmp) itself back to its arena again:

```c
static void tcache_thread_shutdown (void) {
  // ...
  /* Free all of the entries and the tcache itself back to the arena heap for coalescing. */
  for (i = 0; i < TCACHE_MAX_BINS; ++i) {
    while (tcache_tmp->entries[i]) {
      tcache_entry *e = tcache_tmp->entries[i];
      // ...
      tcache_tmp->entries[i] = REVEAL_PTR (e->next);
      __libc_free (e);
    }
  }
  __libc_free (tcache_tmp);
}
```

This is done sequentially: First, all chunks within the size range of 0x20 up to 0x80 are transferred to the fastbin of the arena. Next, chunks bigger than 0x80 are put into the unsorted bin of the arena:

And here is the window of opportunity for an attacker. The first tcache bin suitable for the unsorted bin is the size 0x90 tcache bin. When the thread is terminated, this bin is empty by default. If an attacker had made the application allocate and free a chunk of size 0x90 beforehand, it would have been placed into this tcache bin. This is now the first chunk, which is transferred to the unsorted bin during the thread shutdown.
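The ordering argument can be checked with a small model of the shutdown loop. It walks the bins from the smallest size upwards and reports the first non-empty bin whose chunks are too large for a fastbin. The bin contents in `sketch_demo_shutdown` are an assumed state that follows the scenario in the text; the names and the 64-bit constants are assumptions of this sketch.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_TCACHE_BINS 64u
#define SKETCH_MAX_FAST    0x80u  /* default fastbin limit assumed for 64-bit */

/* Chunk size served by tcache bin `idx` on 64-bit glibc. */
static size_t sketch_bin_chunk_size(unsigned idx) {
    assert(idx < SKETCH_TCACHE_BINS);
    return 0x20u + 16u * idx;
}

/* Walks the bins in shutdown order and returns the chunk size of the first
 * non-empty bin that does not fit a fastbin, or 0 if there is none.
 * counts[i] holds the number of entries in bin i. */
static size_t sketch_first_unsorted_size(const unsigned counts[SKETCH_TCACHE_BINS]) {
    for (unsigned i = 0; i < SKETCH_TCACHE_BINS; i++) {
        size_t size = sketch_bin_chunk_size(i);
        if (counts[i] > 0 && size > SKETCH_MAX_FAST)
            return size;
    }
    return 0;
}

/* Assumed tcache state at thread exit, with or without the prepared chunk. */
static size_t sketch_demo_shutdown(int groom_0x90) {
    unsigned counts[SKETCH_TCACHE_BINS] = {0};
    counts[6] = 1;       /* freed socket, chunk size 0x80 */
    counts[17] = 7;      /* 0x130 bin filled before the disconnect */
    if (groom_0x90)
        counts[7] = 1;   /* prepared chunk of size 0x90 */
    return sketch_first_unsorted_size(counts);
}
```

With the prepared chunk in place, the 0x90 bin is reached before any larger bin, so its chunk is the first one linked into the unsorted bin after the user.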

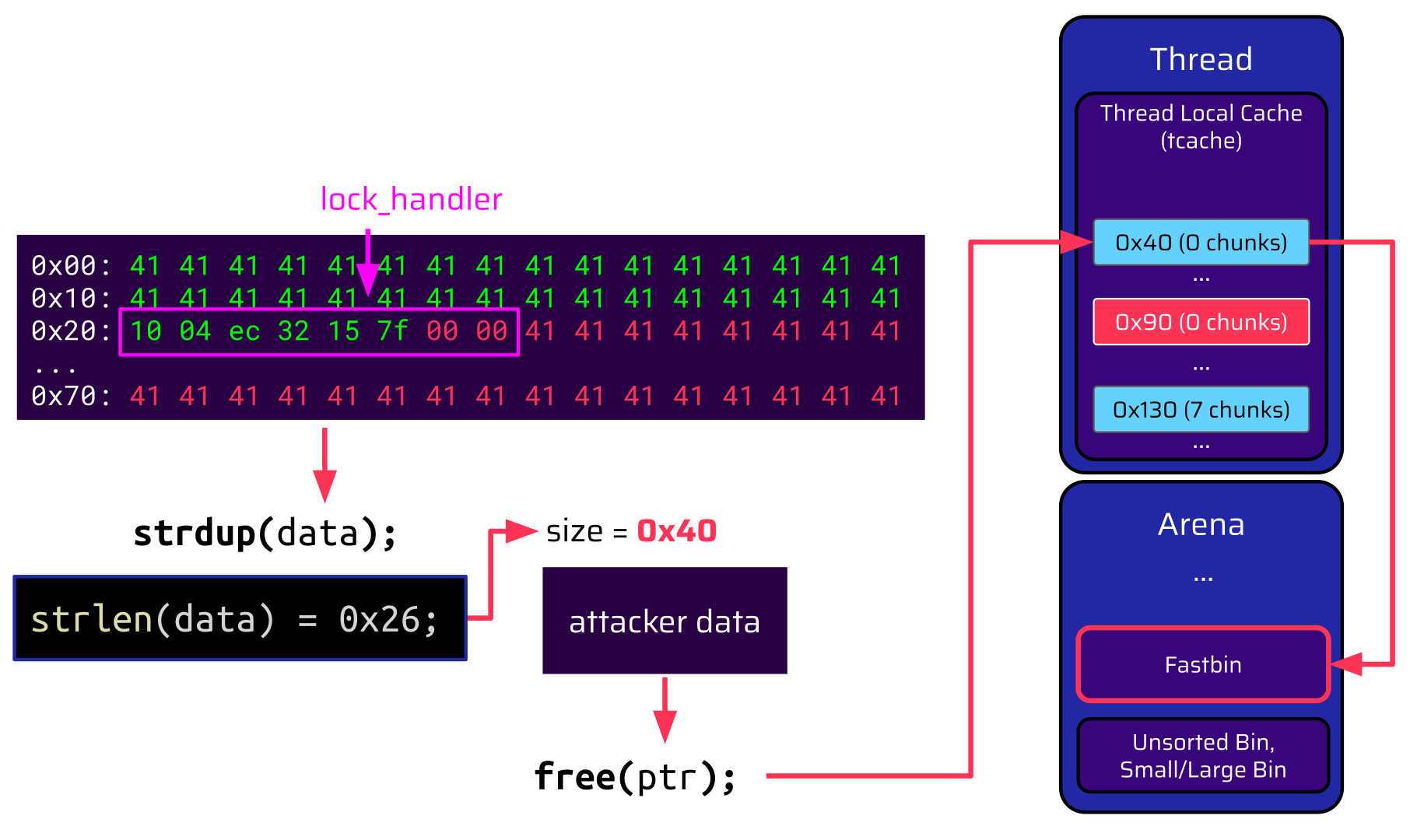

Since this chunk is added to the unsorted bin right after the freed user structure, the backward pointer (BK), which overlaps with the socket pointer, now references this chunk with the attacker data. Therefore, the attacker created a valid data structure:

When the audio channel is closed, the fake socket is referenced through the heap metadata backward pointer (BK), and the attacker-controlled lock_handler function pointer is called. Once the lock_handler function is called, the application raises a segmentation fault, which confirms control of the instruction pointer.

However, there is a little problem with this approach. For all of this to work, the attacker needs to craft some data, which is freed to the 0x90 tcache bin. The only available primitive to do this is calling strdup to copy the attacker-controlled data from a static buffer to the heap:

If the attacker wants to populate the lock_handler function pointer with a gadget address, this address contains null bytes. strdup will only copy the data until the first null byte. This on its own is not a problem because the attacker only has a one-shot gadget anyway, but the allocated chunk is now far smaller than intended. That means if the chunk is freed, it is put into the 0x40 tcache bin instead of the 0x90 tcache bin. When the thread terminates, this tcache bin is not transferred to the unsorted bin but to the fastbin.
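The size problem can be reproduced with a few lines of C. The sketch uses a hypothetical helper, `sketch_dup`, that mimics strdup with ISO C functions only. The payload length, the offset of the address inside it, and the address value itself are made-up values chosen so that the resulting chunk sizes match the ones named above.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Mimics strdup: copies up to and including the first null byte and
 * reports how many bytes were requested from the allocator. */
static char *sketch_dup(const char *src, size_t *request_out) {
    size_t request = strlen(src) + 1;
    char *copy = malloc(request);
    if (copy != NULL)
        memcpy(copy, src, request);
    *request_out = request;
    return copy;
}

/* Chunk size for a request, assuming 64-bit glibc. */
static size_t sketch_request_to_chunk(size_t request) {
    size_t size = (request + 8 + 15) & ~(size_t)15;
    return size < 0x20 ? 0x20 : size;
}

/* Duplicates a 0x88-byte payload, optionally with an embedded address,
 * and returns the chunk size the copy would occupy. */
static size_t sketch_payload_chunk_size(int with_address) {
    char payload[0x88];
    memset(payload, 'A', sizeof payload - 1);
    payload[sizeof payload - 1] = '\0';
    if (with_address) {
        /* Hypothetical address 0x00007f1234567890 in little-endian order. */
        const unsigned char addr[8] = {0x90, 0x78, 0x56, 0x34, 0x12, 0x7f, 0x00, 0x00};
        memcpy(payload + 0x30, addr, sizeof addr);  /* offset is made up */
    }
    size_t request = 0;
    char *copy = sketch_dup(payload, &request);
    free(copy);
    return sketch_request_to_chunk(request);
}
```

Without the address, the copy occupies a 0x90 chunk. With it, the copy stops at the two zero bytes at the top of the address and shrinks to a 0x40 chunk.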

Although the technique of leveraging the heap metadata and internal processing of chunks to craft valid data structures might be applicable in certain situations, we are missing one single primitive for this here. So, back to the drawing board. Could there be another way to leverage reallocation?

Use-After-Free Exploit - With Reallocation?

Let’s reconsider the problem with the classical reallocation approach.

We have a lot of threads. All of these threads are assigned to a different arena. When the connection is terminated, the user and socket are freed by the user_thread and returned to the arena this thread is attached to (arena 4). An attacker could still make allocations, but only from the rdp_client_thread, which is attached to a different arena (arena 8):

At this point, a feature called connection sharing turned out to be very useful. Connection sharing means that somebody else can join an existing session:

In fact, somebody else doesn’t need to be somebody else. It can also be the same user who initiated the connection.

Once a user joins their own connection, no new child process is forked. The existing child process responsible for this connection just creates more threads to handle the shared connection. And there is basically no limit on how often you can join your own connection. This means that an attacker can create a lot of threads. And all of these threads also need an arena. But the number of arenas is limited. This limit depends on the number of CPU cores. For 32-bit systems, the limit is twice the number of cores, and on 64-bit systems, it is eight times the number of cores:

Let’s assume that we have only one core on a 64-bit system. This means we have already reached the limit of 8 arenas, and for additionally created threads, the existing arenas are repurposed:

With the example shown above, one of the io_threads is also assigned arena 4. However, an attacker cannot control any allocations from this thread. The thread an attacker can control for shared connections is the input_thread. Hence, the attacker wants this thread to be assigned to arena 4. In order to do so, the attacker can just join the connection again and create more threads. Eventually, this succeeds, and the input_thread is assigned to the existing arena 4.
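The arena arithmetic can be sketched as follows. The limit formula is the one stated above. The assignment function is a deliberately simplified round-robin model with zero-based arena numbers: the real allocator also prefers arenas that no thread is attached to and skips arenas that are currently locked, so the exact number of joins needed in practice differs. All names are hypothetical.

```c
#include <assert.h>

/* Default arena limit derived from the core count. */
static unsigned sketch_arena_limit(unsigned cores, int is_64bit) {
    assert(cores > 0);
    return cores * (is_64bit ? 8u : 2u);
}

/* Simplified model: threads receive fresh arenas until the limit is
 * reached; afterwards, existing arenas are handed out round-robin. */
static unsigned sketch_arena_for_thread(unsigned thread_index, unsigned limit) {
    assert(limit > 0);
    return thread_index % limit;
}

/* Number of threads that must be created, starting with thread number
 * `next_thread`, until one of them is attached to `target_arena`. */
static unsigned sketch_threads_until_arena(unsigned next_thread,
                                           unsigned target_arena,
                                           unsigned limit) {
    assert(target_arena < limit);
    unsigned created = 1;
    while (sketch_arena_for_thread(next_thread, limit) != target_arena) {
        next_thread++;
        created++;
    }
    return created;
}
```

In this model, with one core on a 64-bit system and the next thread being number 9, four more threads are needed before one of them shares arena 4.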

The allocation primitive available from this thread also allows an attacker to include null bytes. Since it is attached to the same arena as the user_thread, it is now possible to reallocate the freed socket and user and fully control the instruction pointer this time.

Use-After-Free Exploit - ROP Chain

With this solid instruction pointer control, an attacker is now able to trigger an ROP chain to ultimately gain code execution.

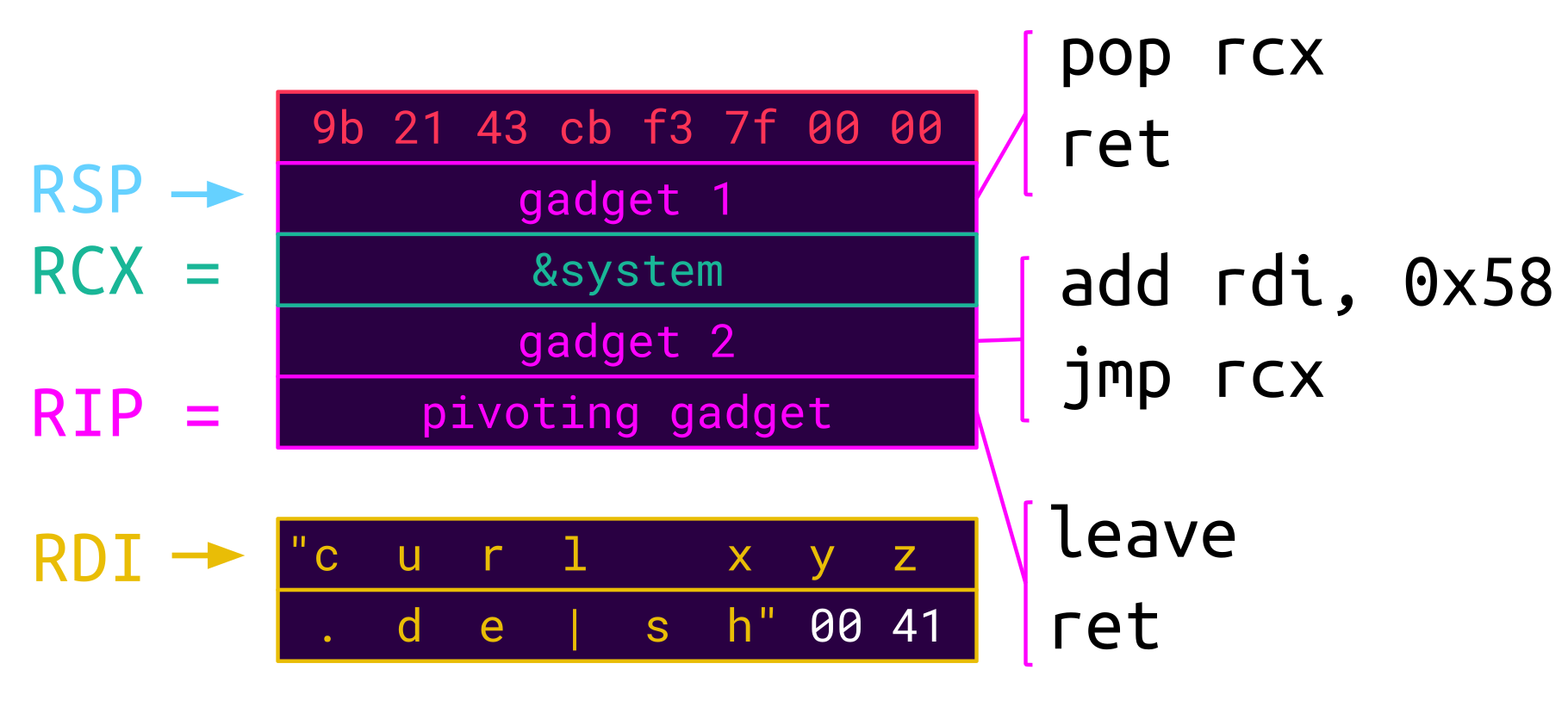

The exploit conditions of the vulnerability are in favor of an attacker. The lock_handler function pointer is called with the socket itself passed as an argument. One disadvantage of the allocation primitive for a shared connection is that the allocated data is immediately freed again. Although an attacker can make it end up in a fastbin, the first 8 bytes are still messed up by the inserted forward pointer. But this can be overcome with a leave;ret pivoting gadget. This gadget sets the stack pointer (RSP) to offset 8, where we can store more gadgets:

The first gadget loads the address of system to RCX, and the second gadget offsets RDI to the command string to be executed and jumps to system. Thus system is executed with the command string provided as its first argument.
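The effect of the leave;ret pivot can be traced with a tiny register model. The instruction semantics are those of x86-64 (leave copies RBP to RSP and pops RBP; ret pops the next address into RIP). That RBP references the fake socket when the gadget runs is an assumption of this sketch, and the memory contents are placeholders, not real gadget addresses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Tiny register file. Memory is an array of 8-byte words, and addresses
 * are byte offsets into that array. */
typedef struct sketch_cpu {
    uint64_t rsp, rbp, rip;
} sketch_cpu;

static uint64_t sketch_load(const uint64_t *mem, uint64_t addr) {
    assert(addr % 8 == 0);
    return mem[addr / 8];
}

/* leave: mov rsp, rbp; pop rbp */
static void sketch_leave(sketch_cpu *cpu, const uint64_t *mem) {
    cpu->rsp = cpu->rbp;
    cpu->rbp = sketch_load(mem, cpu->rsp);
    cpu->rsp += 8;
}

/* ret: pop rip */
static void sketch_ret(sketch_cpu *cpu, const uint64_t *mem) {
    cpu->rip = sketch_load(mem, cpu->rsp);
    cpu->rsp += 8;
}

/* Runs leave;ret with RBP at the start of the fake socket (offset 0). */
static sketch_cpu sketch_pivot(void) {
    const uint64_t fake_socket[4] = {
        0xdeadbeef,  /* offset 0: clobbered by the forward pointer */
        0x1111,      /* offset 8: placeholder for the first gadget */
        0x2222,      /* offset 16: placeholder for the second gadget */
        0x3333       /* offset 24: placeholder for further data */
    };
    sketch_cpu cpu = { .rsp = 0x7000, .rbp = 0, .rip = 0 };
    sketch_leave(&cpu, fake_socket);
    sketch_ret(&cpu, fake_socket);
    return cpu;
}
```

The clobbered first 8 bytes only end up in RBP, while execution continues with the value stored at offset 8 and the stack pointer moves on to offset 16.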

At this point, the attacker has gained code execution on the Guacamole Server via the externally exposed Guacamole Client:

This great Check Point Research article on Apache Guacamole by Eyal Itkin covers a different attack vector and comprehensively describes the impact of gaining remote code execution on the Guacamole Server, which allows an attacker to:

- Harvest more credentials,

- spy on every connection, or

- just pivot to the hosts in the internal network.

Summary

In this second article, we have seen that the requirement of high parallelism makes an application like Guacamole prone to concurrency issues. We have detailed a critical Use-After-Free vulnerability in the audio input feature and walked through different glibc heap exploitation techniques that an attacker could leverage to turn this vulnerability into arbitrary code execution.

Parallelism has been around for decades but is still a source of severe security vulnerabilities nowadays. We have seen that an attacker might not be required to reallocate a freed chunk to exploit a Use-After-Free vulnerability if the heap metadata can be leveraged to craft valid data structures. Of course, this is dependent on the specific scenario, but it is definitely something to keep in mind. Furthermore, we have seen that the arena separation can be overcome by spawning a lot of threads.

Finally, we would like to thank the Guacamole maintainers for quickly responding to our report and providing a comprehensive patch!
